@tridge I apologize for being a bit late for this discussion — it took me a bit of time to dig through the extensive background and understand where ArduPilot is coming from. The worst thing I could do in this situation is to give a quick knee-jerk response. Let me know if you think I missed anything critical from the preceding conversation.

Common ground

Everyone involved in this conversation, or who would like to get involved, should first understand what DS-015 is and what it is not. Please do not post anything regarding DS-015 until you have thoroughly familiarized yourself with the following sources (I know that @tridge, @dagar, and @coder_kalyan have already done so):

- https://uavcan.org (the text on the main page explains what to expect from UAVCAN v1)

- https://uavcan.org/specification (reading the first two chapters will suffice)

- The Cyphal Guide - Applications & Usage - OpenCyphal Forum

- Choosing Message and Service IDs (more on this below)

- https://github.com/Dronecode/SIG-CAN-Drone/blob/main/DS-015%20UAVCAN%20Drone%20Standard%20v1.0.pdf

I want to set the foundation by listing certain points that I believe we can easily agree on.

First, this conversation is not about UAVCAN v1. I think both @tridge and I agree that ArduPilot, and the entire ecosystem around it, will definitely benefit from supporting v1 because it does fix many issues that used to be present in v0. For instance, v1 allows one to modify data types without breaking wire compatibility (which has already been successfully demonstrated); also, it is transport-agnostic, which will become important in the longer term. This conversation, however, is about the application-layer communication standard built on top of UAVCAN v1. Unlike v0, the stable version does not address any application-layer objectives; instead, it delegates this task to higher-level standards. DS-015 is one such standard. There may be others. One such standard may be crafted by the ArduPilot team independently from anyone else (although I would gladly offer my best advice if they welcome it); we will be referring to this hypothetical entity as “APCAN”.

Second, DS-015 is not the best choice for building sensor networks, just as a microscope is a poor choice of tool for hammering in nails. The criticism provided by Tridge is entirely correct: if you take a thing designed to do X and apply it to task Y, you should expect suboptimal results. It saddens me that the critique is so off the mark; I take it that I should have done a better job of explaining why DS-015 is designed this way. I will try to correct this, but in return, I would like to ask you to do your part by honestly zooming out without holding onto your existing preconceptions.

Third, we should strive to unify our requirements instead of building APCAN next to DS-015, as the fork will be beneficial to neither party. Find my proposal at the end of this post.

What DS-015 is not

It is not a replacement for I2C, SPI, UART, or UAVCAN v0. Much of the frustration in @tridge’s post comes from incorrectly set expectations.

Let’s say you have been using a hammer for a long while. It wasn’t a great hammer, but it was just good enough to do its job. Then I came along, took your hammer away, and gave you a new screwdriver as a replacement. You looked at me in bewilderment, asking if I had completely gone insane, because how are you supposed to hammer in nails using that?

You can’t use idiomatic DS-015 for ferrying sensor measurements or actuator commands between the flight controller and its peripherals.

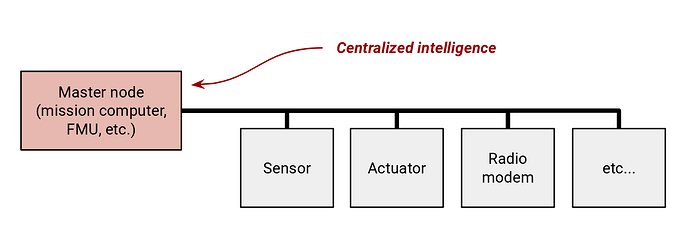

UAVCAN v0 integrates with your flight controller at the driver layer. DS-015 integrates with your flight controller at the layer of its business logic because the other network participants become part of the high-level control processes that used to be concentrated exclusively in the autopilot. This is what you can build using v0, but not idiomatic DS-015:

UAVCAN v0 is built for data exchange. DS-015 is built on the modern theory of distributed computing. @coder_kalyan has done a decent job summarizing the basics — partly repeating the UAVCAN Guide — so I will omit the details.

No more data type identifiers

We need to correct a critical error of interpretation that I spot in these posts (note the added emphasis):

These passages make me realize that I have probably done a poor job writing the section “Semantic segregation” of the Interface Design Guidelines, the introduction to the DS-015 standard, and the chapter “Basic concepts” of the UAVCAN Specification, because all of these were supposed to address or prevent this misunderstanding. Let me quote the relevant bit from The Guide:

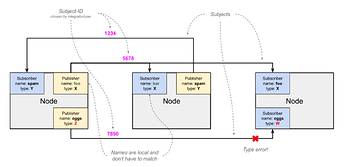

Instantiating a service necessarily involves assigning its subjects and UAVCAN-services certain specific port-identifiers at the discretion of the implementer (for example, configuring an air data computer of an aircraft to publish its estimates as defined by the air data service contract over specific subject-IDs chosen by the integrator).

Excepting special use cases, the port-ID assignment is necessarily removed from the scope of service specification because its inclusion would render the service inherently less composable and less reusable, and additionally forcing the service designer to decide in advance that there should be at most one instance of the said service per network. While it is possible to embed the means of instance identification into the service contract itself (for example, by extending the data types with a numerical instance identifier like it is sometimes done in DDS keyed topics), this practice is ill-advised because it constitutes a leaky abstraction that couples the service instance identification with its domain objects.

So what does it mean practically? Here is an example. Suppose you want to connect a differential pressure sensor to a legacy UAVCAN v0 network. You would make a data type that might look as follows:

uint8 sensor_id # Which specific sensor is it?

float32 pressure_difference # [pascal]

You would assign it a data type ID, let’s say, 20001. Then, when integrating a new sensor into the network, you configure it, defining which value of sensor_id it should publish. Alternatively, you could use the node-ID of the sensor to differentiate its data from other sensors of the same kind.
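To make the v0 pattern concrete, here is a minimal sketch in plain Python (no UAVCAN library involved). The class names `DiffPressureV0` and `V0Subscriber` are hypothetical and exist only for illustration; the point is that the subscriber has to look inside the message to learn which sensor it came from.

```python
from dataclasses import dataclass

DIFF_PRESSURE_DTID = 20001  # the data type ID chosen in the example above


@dataclass(frozen=True)
class DiffPressureV0:
    """Illustrative model of the v0-style message; not a real library type."""
    sensor_id: int              # which specific sensor is it?
    pressure_difference: float  # [pascal]


class V0Subscriber:
    """Keeps the latest reading per sensor_id for one data type ID."""

    def __init__(self, data_type_id):
        self.data_type_id = data_type_id
        self.latest = {}

    def on_message(self, data_type_id, msg):
        # Routing step 1: the data type ID selects the kind of data.
        if data_type_id != self.data_type_id:
            return
        # Routing step 2: a field inside the payload selects the instance.
        self.latest[msg.sensor_id] = msg.pressure_difference


sub = V0Subscriber(DIFF_PRESSURE_DTID)
sub.on_message(20001, DiffPressureV0(sensor_id=0, pressure_difference=101.5))
sub.on_message(20001, DiffPressureV0(sensor_id=1, pressure_difference=99.0))
sub.on_message(20002, DiffPressureV0(sensor_id=0, pressure_difference=5.0))  # other type, ignored
print(sub.latest)
```

Instance identification here is part of the data type itself, which is the coupling discussed in the quote from the Guide.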

None of these methods work in UAVCAN v1: there are no data type identifiers. Further, the Guide also explains why the node-ID should not be used at the application layer (excepting special scenarios that are not related to this discussion).

In UAVCAN v1, your data type would look as follows:

float32 pressure_difference # [pascal]

Or, since it is just a physics thing, you could just use uavcan.si.unit.pressure.Scalar.1.0 to the same effect.

Okay, but if there is no data type identifier, then how does your sensor know how to publish the data, and how does your subscriber (e.g., the autopilot) know where to look for this data? The nodes are configured at the time of their integration into the system. UAVCAN v1 fixes the so-called syntax-semantic entanglement problem that was, by far, the worst offender among the design deficiencies present in v0.

UAVCAN v1 offers port-identifiers as a replacement, but they cannot be set statically at the data type definition level, as explained in the linked resources. Exceptions are given for some standard data type definitions, but only that — a vendor cannot define a data type with a fixed identifier. Section “Basic concepts” of the Specification explains why.

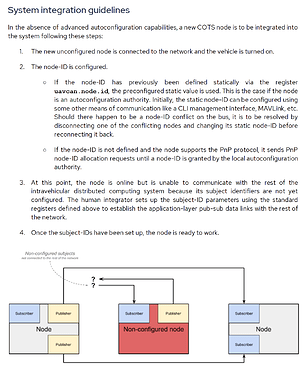

This means that the user of your differential pressure sensor cannot possibly integrate the unit into the system without first configuring the subject-ID at which its data should be published; said configuration is also performed via UAVCAN using the Register Interface (no need to craft additional user interfaces). Did you miss this point?
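The following is a rough sketch of that integration step in plain Python, not the PyUAVCAN API. The `Bus` class and the `port_id` helper are hypothetical, the register names only follow the `uavcan.pub.<name>.id` / `uavcan.sub.<name>.id` pattern as I would expect an implementation to expose them, and the subject-ID values and the 8191 upper bound are assumptions made for the example.

```python
from collections import defaultdict
from functools import partial

SUBJECT_ID_MAX = 8191  # assumed upper bound for a valid subject-ID


class Bus:
    """Toy in-process stand-in for the network: routes values by subject-ID only."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, subject_id, handler):
        self._handlers[subject_id].append(handler)

    def publish(self, subject_id, value):
        for handler in self._handlers[subject_id]:
            handler(value)


def port_id(registers, kind, port_name):
    """Look up a port-ID in a node's register table (hypothetical helper)."""
    value = registers[f"uavcan.{kind}.{port_name}.id"]
    if not 0 <= value <= SUBJECT_ID_MAX:
        raise ValueError(f"{port_name}: subject-ID {value} is not configured")
    return value


# Chosen by the integrator, not by the sensor vendor (values are made up).
left_sensor = {"uavcan.pub.differential_pressure.id": 2000}
right_sensor = {"uavcan.pub.differential_pressure.id": 2001}
autopilot = {
    "uavcan.sub.pitot_left.id": 2000,
    "uavcan.sub.pitot_right.id": 2001,
}

bus = Bus()
readings = {}


def store(name, value):
    readings[name] = value


for name in ("pitot_left", "pitot_right"):
    bus.subscribe(port_id(autopilot, "sub", name), partial(store, name))

# Both sensors run identical firmware and publish a bare pressure value.
bus.publish(port_id(left_sensor, "pub", "differential_pressure"), 101.5)
bus.publish(port_id(right_sensor, "pub", "differential_pressure"), 99.0)
print(readings)
```

The payload carries no sensor_id: which physical sensor a value belongs to follows entirely from the subject-ID configuration on both ends.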

It is also possible to automate the port-ID assignment in certain scenarios, although this is expected to be of limited utility in general. There is an unfinished proposal that can be resurrected if there is interest.

If you are surprised by this, please stop now and read this discussion before continuing, because it is absolutely instrumental for us to have a sensible conversation:

To have a hands-on experience with the computation graph offered by UAVCAN v1, please run this Python demo on your local computer (works best with GNU/Linux): https://pyuavcan.readthedocs.io/en/stable/pages/demo.html. Then also run the DS-015 servo demo to see the embedded side of the same problem.

I hope the questions that I quoted at the beginning of this section are now answered.

No more sensor nodes

UAVCAN v1 is built to enable (hard) real-time distributed computing. Distribution enables one to construct more complex systems using less complex individual parts. This point, in essence, mirrors the old debate about the differences between monolithic kernels and microkernels in OS design, which I presume most are familiar with. If you want a more in-depth discussion of this aspect, refer to the Guide.

DS-015 takes advantage of the capabilities offered by v1, bringing the specifics of one particular domain (drones) together with UAVCAN such that the tasks that are pervasive in this domain are addressed consistently in a way that is easy to standardize around.

While plug-and-play is, generally, in the scope of DS-015, one should not expect it to be as extensive as in v0. Since we are talking about a distributed system, expecting it to be entirely plug-and-play is akin to expecting the autopilot firmware to write itself.

Similar principles of distribution and compartmentalization stand behind ARINC 653, which also enables one to construct independently certifiable components, simplifying the maintenance and upgrade of the system. The benefits of such architectures do not necessarily need to be confined to safety-certifiable systems only, of course.

DS-015 assumes that every component is an independent agent that works in collaboration with its peers, such that the role of the underlying UAVCAN v1 is similar to IPC. An existing piece of COTS UAVCAN v0 drone hardware can be made DS-015 compliant by merely swapping its software, without the need to alter the hardware (excepting, perhaps, some marginal scenarios I am not aware of). However, the software does become more complex, which is acknowledged. I presume that vendors of low-cost drone hardware who don’t have access to adequate software development expertise won’t be able to pull it off unless we provide them with extensive support, perhaps in a fashion similar to AP_Periph. The UAVCAN Consortium is well-equipped for this type of collaboration.

The following quote from an adjacent topic reveals critical misunderstanding:

There are several issues:

- UAVCAN v1 does not really say anything about sensor nodes, because it is below the layer of abstraction where this distinction makes sense. In UAVCAN v1, there are just nodes. You may call them sensor nodes if you want, this is fine.

- DS-015 models physical processes and subsystems instead of sensors. Calling a DS-015 node a “sensor node” is like calling a public Java method a “subroutine”: it is either wrong, or it is evidence of poor design. Idiomatic DS-015 assumes that you hide your sensor behind a higher-layer abstraction. The airspeed estimation with the IAS/CAS debate is a good example of this distinction.

We are now equipped to address the specific problems listed in the original post:

Do you also need to analyze the raw current measurements made by the ESCs? Unless you have any highly non-trivial requirements I am not aware of, I don’t expect the need to analyze the raw data of every sensor to persist once you adopt the distributed mindset.

The logging aspect is handled by UAVCAN itself, which assumes publishing diagnostic data (such as internal states or informational messages) at a low priority level. We will talk about this more in the thread about the transition from v0 to v1.

This is not really a deficiency of DS-015, but rather a case of incompatible requirements. More on this in the next section.

If this is manageable for the autopilot, then it is manageable for the air data computer node as well.

Your requirements are incompatible with idiomatic DS-015

I understand that what you are looking for at this moment has little to do with idiomatic DS-015. While I am confident that sooner or later you will acknowledge the benefits of distributed architecture outside of the most trivial scenarios, at the moment you need a simpler solution. To this end, @dagar has suggested a middle-ground solution that is mostly valid and can be implemented without causing undue fragmentation of the ecosystem.

A basic airspeed sensor node (sic!) can be easily implemented using the physics data types provided by DS-015 and the standard uavcan.si namespace. This does not agree with idiomatic DS-015 but it will be built on the same basic foundations and opens a solid path for an eventual transition to DS-015 for adopters who find value in it.

Taking our airspeed sensor node (that is, taking the low-level approach as opposed to the alternative offered by DS-015) as an example, we could conceivably make it publish on the following subjects:

| Subject | Type |
|---|---|
| differential_pressure | uavcan.si.unit.pressure.Scalar.1.0 |
| outside_air_temperature | uavcan.si.unit.temperature.Scalar.1.0 |
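With this low-level approach, the estimation stays on the subscriber side. As a rough sketch of what the autopilot might do with the two subjects, assuming the incompressible-flow relation q = ½ρv² and the ideal gas law for density: the function names are hypothetical, and `static_pressure` is an extra input (for example, from a barometer) that is not one of the subjects listed above.

```python
import math

RHO_0 = 1.225       # [kg/m^3] standard sea-level air density
R_DRY_AIR = 287.05  # [J/(kg*K)] specific gas constant of dry air


def indicated_airspeed(differential_pressure):
    """Airspeed [m/s] from differential pressure [pascal], using sea-level density."""
    return math.sqrt(2.0 * max(differential_pressure, 0.0) / RHO_0)


def density_corrected_airspeed(differential_pressure, outside_air_temperature, static_pressure):
    """Airspeed [m/s] using the local air density.

    outside_air_temperature is in kelvin; static_pressure is in pascal and is
    assumed to come from another source, not from the airspeed sensor node.
    """
    rho = static_pressure / (R_DRY_AIR * outside_air_temperature)
    return math.sqrt(2.0 * max(differential_pressure, 0.0) / rho)


ias = indicated_airspeed(612.5)
tas = density_corrected_airspeed(612.5, 288.15, 101325.0)
print(round(ias, 2), round(tas, 2))
```

In idiomatic DS-015 this computation would live behind the air data computer's interface rather than in the autopilot.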

You could also take the more complex types from the physics namespace. We could also alter them or define new ones. We are entirely open to extending the reg.drone.physics namespace to suit your requirements.

There is another alternative that is not mutually exclusive with the above. Your usage of UAVCAN does not really allow you to leverage the architectural advantages it offers — you are treating it as a point-to-point, star topology network (I am talking about the application layer topology here) to mimic I2C/SPI. In this case, we could also consider defining an additional profile next to reg.drone specifically for tunneling I2C, SPI, and possibly other protocols over UAVCAN. This way you could plug UAVCAN v1 as a backend for one of your I2C/SPI sensor drivers, which I suppose should be relatively easy to do.

Here is a call to action for you: please define a list of data objects that the airspeed sensor node has to publish and subscribe to. Then we will work together to make this design aligned with the DS-015 type system. It won’t be idiomatic DS-015, but at least it will rest on the same foundation using the same type library, opening the path for future convergence. I would like you to postpone forming premature opinions about the results of this experiment until it is concluded.

Other applications can benefit from idiomatic DS-015

As I wrote in my last email, the UAVCAN Consortium is inclined to fund work on implementing the support for idiomatic DS-015 on the ArduPilot side. The first step might be focused on supporting DS-015 actuators. I would like to gauge your opinion on this and whether you would be open to accepting high-quality contributions to this end. I do not expect this work to burden the core dev team beyond reviewing pull requests.

Meta: about this discussion

I would like to invite everyone to keep the conversation constructive and strictly on-point. Please desist from posting anecdotes and “+1”-style responses that do not add new information. Also, I would kindly ask everyone to avoid sharing opinions about DS-015 or UAVCAN v1 until you have at least a basic understanding of what these are.

We aspire to somewhat higher standards of discourse than some of the newly registered users might be used to. If this seems new, consider reading the FAQ.