One of the key things that will determine whether v1 gains traction, or whether users come to regret ever considering it, is how well we handle the transition from v0 to v1, and how well we handle mixed networks.

We should expect that v0 will be common on production vehicles in the UAS space for at least the next 3 years, and 1MBit operation will be common for even longer. There will be lots of setups that are pure v1 at high bitrates before then (or at least I hope there will be!), but for many production environments making the switch will be a slow process. I’m opening this topic to have a place to discuss how we will handle this transition period. My apologies if this is already covered elsewhere and I have missed it. Note that the issue is much broader than wire-protocol compatibility.

Types of mixed networks

There are several quite distinct types of mixing that will matter:

- mixtures of nodes that can only do v0 with v1 capable nodes

- a single sensor node that is configured to broadcast the same data both as v0 and v1

- an autopilot configured to accept both v0 and v1 sensors

- mixtures of v1 nodes that are FDCAN capable and those that are not

- mixtures of data consumers (of which autopilots are one example) that have different capabilities, such as companion computers or safety systems that only understand v0 while the flight controller understands v1.

I know that my nomenclature with things like “sensor node” goes against the philosophy of v1. That is quite deliberate. Users will see a physical device on their vehicle and the primary purpose of the device is providing sensor data. That is a “sensor node”. Hiding that distinction does not help.

Key Issues

A whole pile of interesting (and likely frustrating) issues will come up with the various types of mixing. Some of the key ones are:

- the existence of diagnostic tools (such as config and bus monitoring tools) that understand these mixed networks and can help system integrators navigate the issues

- alignment of node identification between v0 and v1

- automatic upgrading of bitrates on FDCAN if all nodes are capable of higher rates

- possibly supporting the “cover your ears now” method of doing high data rates when one or more nodes on the network can’t handle high rates

- proper testing of mixed network scenarios so we discover the issues before they bite our end users (who may have pretty complex setups)

Diagnostic Tools

In the ArduPilot world users either use uavcan_gui_tool or they use MissionPlanner to analyse what is happening on their CAN buses. Both have packet inspectors, firmware update UIs, parameter control UIs etc.

We need to be able to point users at diagnostic tools that can handle all of the above mixed network scenarios and give them clear information on what is happening, so they can diagnose and fix issues. That means the tools need to either bind to two separate APIs and combine them, or have a single API that can handle both v0 and v1. The display needs to make it really clear whether v0 or v1 is being used in each packet (more colors?) and needs filtering capabilities so the two can be separated. It is not really good enough to have to launch separate tools for v0 and v1.
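One cheap building block for such a combined display is telling v0 and v1 frames apart on the wire. Below is a minimal Python sketch. It assumes the tail-byte layout shared by both versions (start-of-transfer 0x80, end-of-transfer 0x40, toggle 0x20) and relies on v0 starting every transfer with the toggle bit cleared while v1 starts it set. Continuation frames carry no version marker of their own, so the sketch remembers the version of each in-progress multi-frame transfer by CAN ID. The class name is hypothetical. A real tool would also need to handle transfer timeouts and frames captured mid-transfer.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Tail-byte flags (last byte of every frame), common to v0 and v1.
START_OF_TRANSFER = 0x80
END_OF_TRANSFER = 0x40
TOGGLE = 0x20


@dataclass
class ProtocolClassifier:
    """Label each received frame as "v0", "v1" or None (unknown)."""

    # Protocol of each multi-frame transfer in progress, keyed by CAN ID.
    in_progress: Dict[int, str] = field(default_factory=dict)

    def classify(self, can_id: int, data: bytes) -> Optional[str]:
        if not data:
            return None  # empty frames carry no tail byte
        tail = data[-1]
        if tail & START_OF_TRANSFER:
            # First frame of a transfer: toggle is 0 in v0 and 1 in v1.
            proto = "v1" if tail & TOGGLE else "v0"
            if not tail & END_OF_TRANSFER:
                self.in_progress[can_id] = proto  # multi-frame transfer begins
            return proto
        # Continuation frame: reuse the version seen at start of transfer.
        proto = self.in_progress.get(can_id)
        if tail & END_OF_TRANSFER:
            self.in_progress.pop(can_id, None)
        return proto
```

For example, `ProtocolClassifier().classify(0x1234, bytes([0x01, 0xC0]))` returns `"v0"` because the frame is a single-frame transfer with the toggle bit cleared. The display layer could then colour or filter on the returned label.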

We also need these tools to be smart enough to make it easy to relate a packet they are seeing in the bus trace to a physical device. The abstractions in v1 may make this harder.

Being able to save a full bus trace in a format that can be uploaded to a forum for analysis will also be very useful, much like we use tlog files for MAVLink.
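As a starting point for a shareable trace format, one option is to reuse the plain-text log format written by Linux can-utils `candump -l`, so that traces can be inspected or replayed with existing tools. The sketch below assumes that format: a seconds.microseconds timestamp, the interface name, `ID#DATA` for classic frames and `ID##<flags><DATA>` for CAN FD frames. The function name is hypothetical, and whether this is the right format for forum uploads is open for discussion.

```python
def format_candump_line(
    timestamp: float,
    iface: str,
    can_id: int,
    data: bytes,
    fd: bool = False,
    brs: bool = False,
) -> str:
    """Format one frame as a can-utils style log line (29-bit IDs assumed)."""
    # Split into whole seconds and microseconds without float rounding surprises.
    sec, usec = divmod(round(timestamp * 1_000_000), 1_000_000)
    ident = f"{can_id & 0x1FFFFFFF:08X}"  # extended IDs print as 8 hex digits
    payload = data.hex().upper()
    if fd:
        flags = 0x01 if brs else 0x00  # 0x01 = bit rate switch used
        frame = f"{ident}##{flags:X}{payload}"
    else:
        frame = f"{ident}#{payload}"
    return f"({sec:010d}.{usec:06d}) {iface} {frame}"
```

A logger would append one such line per received frame. Because both v0 and v1 frames are recorded raw, the analysis tool can re-derive which protocol each one belongs to.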

Node Alignment

One potentially tricky issue is nodes that are broadcasting the same sensor data as both v0 and v1. If a flight controller is on the network, it needs to know that the data is a duplicate, or we will end up double fusing it, which would not be good. Even just the user seeing “you have 3 GPS modules” on their ground station when they only have 2 physically connected is something we should avoid.

We’ve had this issue in the past with things like the Fix and Fix2 GNSS messages in v0. ArduPilot handles that by keeping track of whether it has ever received a Fix2 from a particular node; if it has, it discards any Fix messages from that node. That prevents double fusion of the data in that special case.
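The Fix/Fix2 rule fits in a few lines. This is a Python sketch of the logic just described, not ArduPilot’s actual (C++) code. The message-type strings are hypothetical placeholders for however the receiver identifies the two message types.

```python
from typing import Set


class GnssDeduplicator:
    """Drop legacy Fix messages from any node that has ever sent Fix2."""

    def __init__(self) -> None:
        self._fix2_nodes: Set[int] = set()  # node IDs seen sending Fix2

    def accept(self, node_id: int, message_type: str) -> bool:
        if message_type == "Fix2":
            self._fix2_nodes.add(node_id)
            return True
        if message_type == "Fix":
            # Fix is only used if this node has never sent the newer message.
            return node_id not in self._fix2_nodes
        return True  # other message types pass through untouched
```

Note that a Fix received before the node’s first Fix2 is still accepted, which is the inherent weakness of a "have we ever seen it" rule.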

How do we do this for mixed v0/v1? I must admit that I’m still quite fuzzy on the node ID stuff in v1, but the bit that I think I understand makes me nervous about this case. How can we robustly detect that a v0 GNSS packet is a duplicate of a packet from the same node that came in as v1? I do hope the answer is not going to be that we shouldn’t support such mixing, as that would just cause v1 deployment to be deferred a lot longer.
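One possible way to align identities is sketched below under stated assumptions. v0 nodes report a 16-byte unique ID in their `uavcan.protocol.GetNodeInfo` response, and v1 nodes report one in `uavcan.node.GetInfo`. The sketch assumes that a dual-stack node reports the same unique ID through both stacks; that is an assumption about firmware behaviour, not something either spec guarantees. Under that assumption, a consumer can group both node IDs under one physical device, count devices rather than node IDs, and ignore the v0 stream from any device it also hears on v1. The class and method names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass
class PhysicalDevice:
    unique_id: bytes
    v0_node_id: Optional[int] = None
    v1_node_id: Optional[int] = None


class DeviceRegistry:
    """Groups v0 and v1 node IDs by the 16-byte unique ID they report."""

    def __init__(self) -> None:
        self._by_uid: Dict[bytes, PhysicalDevice] = {}
        self._by_node: Dict[Tuple[str, int], PhysicalDevice] = {}

    def register(self, protocol: str, node_id: int, unique_id: bytes) -> PhysicalDevice:
        """Record a node-info response received over the given protocol."""
        if len(unique_id) != 16:
            raise ValueError("unique ID must be 16 bytes")
        if protocol not in ("v0", "v1"):
            raise ValueError(f"unknown protocol {protocol!r}")
        dev = self._by_uid.get(unique_id)
        if dev is None:
            dev = PhysicalDevice(unique_id)
            self._by_uid[unique_id] = dev
        if protocol == "v0":
            dev.v0_node_id = node_id
        else:
            dev.v1_node_id = node_id
        self._by_node[(protocol, node_id)] = dev
        return dev

    def should_fuse(self, protocol: str, node_id: int) -> bool:
        """Decide whether sensor data from this node should be used."""
        dev = self._by_node.get((protocol, node_id))
        if dev is None:
            return False  # not identified yet: wait rather than risk double fusion
        if protocol == "v0" and dev.v1_node_id is not None:
            return False  # same device also publishes v1, so prefer v1
        return True

    def device_count(self) -> int:
        """Number of distinct physical devices, not node IDs."""
        return len(self._by_uid)
```

Refusing to fuse data from a node until it has been identified trades a short startup delay for never double counting. A real implementation would also have to expire stale entries when a node ID is reassigned.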

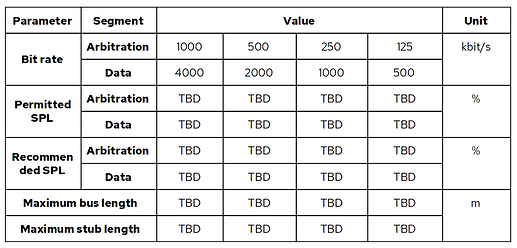

Bitrate Negotiation

We really should aim for as much of this mixed network stuff to be automatic as possible. That includes the bitrate negotiation. Ideally, if the user only plugs in nodes that are capable of higher bitrates, the default action should be for all the nodes to switch to the higher rate automatically. It should be possible to configure nodes not to do this, but I’d like to see if we can make it automatic by default.

This is tricky because it isn’t just a matter of whether the node has an FDCAN-capable peripheral; we also have to know that its transceiver is capable of higher rates. For example, the pretty common TJA1051 is only rated at 5MBit, whereas a lot of boards (likely most high-end boards?) have transceivers capable of 8MBit.

It is also complicated by the fact that most UAVCAN sensors currently commercially available are only capable of 1MBit. We hope to provide updated firmware for these that will make them v1 capable, but we can’t make them capable of more than 1MBit. We should not be asking our users to throw out their existing equipment, so we need to cope gracefully while these are on the network.
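To make the goal concrete, here is a minimal Python sketch of how a bus master might pick the data-phase bitrate. It assumes every node can somehow report three things: whether its controller is FD capable, the maximum rate of its controller and of its transceiver, and whether the user has opted it out of automatic upgrades. That capability report is itself an assumption. The `NodeCapability` type, the candidate rate list and `choose_bus_mode` are all hypothetical. Each node’s effective limit is the slower of its controller and its transceiver, and a single 1MBit-only node keeps the whole bus on classic CAN.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple

# Hypothetical data-phase rates to try, fastest first (bits per second).
CANDIDATE_DATA_RATES = (8_000_000, 5_000_000, 4_000_000, 2_000_000)
CLASSIC_1MBIT = ("classic", 1_000_000)


@dataclass(frozen=True)
class NodeCapability:
    fd_controller: bool              # does the CAN peripheral support FD?
    controller_max_bps: int          # fastest data-phase rate of the peripheral
    transceiver_max_bps: int         # fastest rate the transceiver is rated for
    allow_auto_upgrade: bool = True  # user opt-out switch

    def effective_max_bps(self) -> int:
        # The slower of peripheral and transceiver sets the real limit.
        return min(self.controller_max_bps, self.transceiver_max_bps)


def choose_bus_mode(nodes: Iterable[NodeCapability]) -> Tuple[str, int]:
    """Return ("fd", data_bps) or ("classic", 1_000_000) for the whole bus."""
    node_list = list(nodes)
    if not node_list:
        return CLASSIC_1MBIT
    # Any 1MBit-only node (e.g. existing sensors) keeps the bus classic.
    if any(not n.fd_controller for n in node_list):
        return CLASSIC_1MBIT
    # Any node configured not to upgrade also keeps the default.
    if any(not n.allow_auto_upgrade for n in node_list):
        return CLASSIC_1MBIT
    limit = min(n.effective_max_bps() for n in node_list)
    for rate in CANDIDATE_DATA_RATES:
        if rate <= limit:
            return ("fd", rate)
    return CLASSIC_1MBIT
```

For instance, a bus with one 8MBit node and one node whose transceiver is rated at 5MBit would settle on 5MBit. Actually switching rates also requires every node to change at the same moment, which this sketch does not address.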

Cover Your Ears Method

Sometimes we will really want higher bitrates for a bulk transfer (e.g. firmware update) while there is still a node on the network that can’t handle high bitrates. One possible way to handle this is for the initiator of the transfer to send out a broadcast message effectively saying “everyone who is not 8MBit capable please stop listening for the next NNN milliseconds”. You wouldn’t want to do this while flying, but for ground config it could make things a lot faster. Do we want to try to implement this?
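A node-side sketch of the idea follows, in Python for readability. It assumes a hypothetical broadcast that carries the rate the initiator intends to use and a duration. A node that cannot handle that rate keeps its CAN controller offline until the window expires, so it does not disrupt traffic it cannot decode (a classic CAN controller would typically flag FD frames as errors). The `armed` guard reflects the point that this should only happen on the ground. The message, class and field names are illustrative only.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class QuietRequest:
    """Hypothetical broadcast: nodes slower than required_bps go quiet."""
    required_bps: int
    duration_ms: int


class CoverYourEars:
    """Node-side handling of a QuietRequest (all names hypothetical)."""

    def __init__(self, max_bps: int, clock: Callable[[], float] = time.monotonic) -> None:
        self.max_bps = max_bps
        self._clock = clock
        self._quiet_until: Optional[float] = None

    def on_quiet_request(self, req: QuietRequest, armed: bool) -> None:
        if armed:
            return  # never go deaf in flight; ground configuration only
        if self.max_bps >= req.required_bps:
            return  # this node can follow the fast traffic, keep listening
        deadline = self._clock() + req.duration_ms / 1000.0
        # Extend, never shorten, an existing quiet window.
        if self._quiet_until is None or deadline > self._quiet_until:
            self._quiet_until = deadline

    def controller_enabled(self) -> bool:
        """False while the node should keep its CAN controller offline."""
        if self._quiet_until is None:
            return True
        if self._clock() >= self._quiet_until:
            self._quiet_until = None  # window over, rejoin the bus
            return True
        return False
```

Injecting the clock keeps the logic testable. Firmware would poll `controller_enabled()` from its main loop and take the peripheral offline or bring it back accordingly.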

That should be enough to get this discussion going. It is certainly not a comprehensive list of the issues we’re likely to encounter as we start to add v1 to the network, but it should give some starting points for discussion.