What are your thoughts on routing Cyphal service requests and responses over multiple networks? What questions and scenarios should we be considering when developing the Cyphal/UDP specification?

I’m working with Scott Dixon (@scottdixon) and just started looking at the development of the Cyphal/UDP specification.

I see there are some proposals and ongoing development in forum posts by Pavel (see 1, 2, 3 below), but I’m unsure whether more work has already been completed or researched, specifically around how transfers will be routed through a network. From what I have read, it looks like we can use multicast as a specialized broadcast to cover subjects and messaging, and we can use ports for services. But how will we address a destination server for requests and responses?

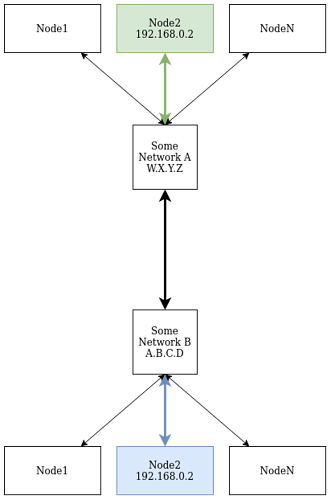

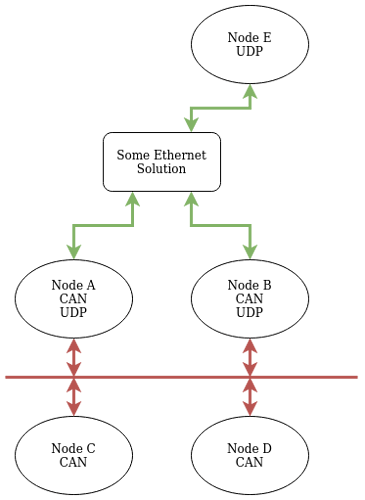

For example, if we have two local networks, A and B, that are connected over UDP and each contain multiple nodes, how will Node2 on network A (nodeID = 2) address Node2 on network B? See the image below. This is a simple case; we could conceivably construct a more complicated set of networks and nodes, but it should be enough to start some discussion.

Within each network, simply using the last 2 octets of the IP address should suffice for addressing and routing (as described in source 2 by Pavel), but across networks we also need some way to encode the network ID.
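To make the ambiguity concrete, here is a minimal Python sketch of the "last 2 octets" idea. The function names and the `10.1.x.x` / `10.2.x.x` addresses are my own assumptions for illustration, not anything taken from the proposals. It only shows that two nodes on different networks can end up with the same node ID while differing in the upper 16 bits of the address.

```python
import ipaddress


def node_id_from_ip(ip: str) -> int:
    """Return the 16 least significant bits (last 2 octets) of an IPv4 address."""
    return int(ipaddress.IPv4Address(ip)) & 0xFFFF


def upper_bits_from_ip(ip: str) -> int:
    """Return the 16 most significant bits (first 2 octets) of an IPv4 address."""
    return int(ipaddress.IPv4Address(ip)) >> 16


# Hypothetical addresses: Node2 on network A and Node2 on network B.
node2_on_a = "10.1.0.2"
node2_on_b = "10.2.0.2"

# Both addresses yield the same node ID...
same_node_id = node_id_from_ip(node2_on_a) == node_id_from_ip(node2_on_b)
# ...so only the upper octets can tell the two nodes apart.
different_network = upper_bits_from_ip(node2_on_a) != upper_bits_from_ip(node2_on_b)
print(same_node_id, different_network)
```

Whether the upper octets could double as a network ID, or whether that information has to be carried somewhere else, is exactly the open question.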

My naive approach to solving this problem assumes that we are in a controlled embedded system and that we can use statically pre-configured routing tables and some port forwarding to address nodes across networks (NetworkA:Node2 → NetworkB:Node2). However, that might interfere with how ports would be used locally for subjects and services.
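As a rough sketch of what I mean by a static routing table, something like the following Python could live on each node or gateway. Everything here is hypothetical: the network names, the gateway address `192.168.0.2`, the port numbers, and the `resolve` helper are placeholders I made up, and none of it reflects an agreed Cyphal/UDP port layout.

```python
import ipaddress
from typing import NamedTuple


class Endpoint(NamedTuple):
    ip: str
    port: int


# All values below are hypothetical placeholders.
LOCAL_NETWORK = "A"
LOCAL_PREFIX = int(ipaddress.IPv4Address("10.1.0.0"))  # upper 2 octets of network A
LOCAL_SERVICE_PORT = 16000  # stand-in for whatever port services use locally

# Statically pre-configured table: (network, node ID) -> gateway IP and forwarded port.
STATIC_ROUTES = {
    ("B", 2): Endpoint("192.168.0.2", 20002),
    ("B", 3): Endpoint("192.168.0.2", 20003),
}


def resolve(network: str, node_id: int) -> Endpoint:
    """Map a (network, node ID) pair to the UDP endpoint a request is sent to."""
    if not 0 <= node_id <= 0xFFFF:
        raise ValueError("node ID must fit into the last 2 octets")
    if network == LOCAL_NETWORK:
        # Local case: the node ID is placed directly into the last 2 octets.
        return Endpoint(str(ipaddress.IPv4Address(LOCAL_PREFIX | node_id)), LOCAL_SERVICE_PORT)
    # Remote case: rely on the gateway forwarding a dedicated port to the node.
    return STATIC_ROUTES[(network, node_id)]


print(resolve("A", 2))  # local Node2
print(resolve("B", 2))  # Node2 on network B, reached through the gateway
```

The remote case consumes one forwarded port per destination node, which is where I suspect the conflict with locally used subject and service ports would show up.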