My apologies if I misuse terms like RPC/service transfer, etc.

Edit/disclaimer: if certain node IDs are already reserved, we can just choose different numbers. This is mostly for example and discussion.

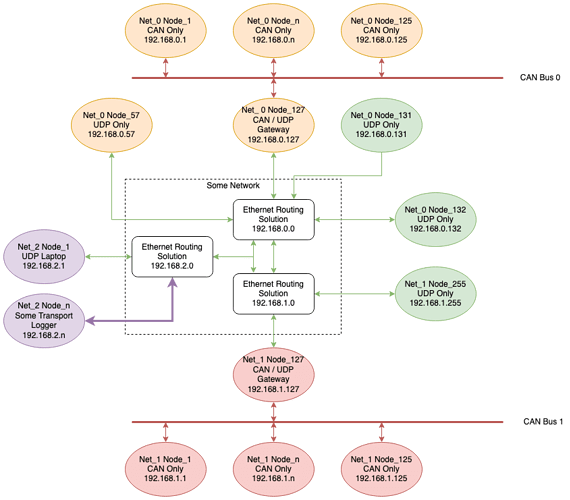

What about a convention/heuristic, using routing or bridging, that converts between CAN node IDs and IP-address node IDs? Edit: routing and bridging are combined into a single gateway.

Assuming an IPv4 address of the form A.B.C.X:

- A.B.C defines the target network (as usual in IP), where A and B are static for local networks and C varies as necessary.

- X = 0-125 defines a CAN-only node or a UDP-only node.

- X = 126 is reserved for external traffic.

- X = 127 defines a CAN/UDP gateway (bridge/router).

- X = 128-255 defines a UDP-only node.
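To make the address convention concrete, here is a minimal sketch of how a gateway might classify an address by its last octet. It assumes the reserved values 126 and 127 take precedence and that UDP-only nodes use 128-255; the `classify` function, the role strings, and the `10.0` prefix for A.B are hypothetical and only for illustration.

```python
from ipaddress import IPv4Address
from typing import Tuple

# Hypothetical constants for the proposed convention (not from any spec).
EXTERNAL_X = 126  # reserved for external traffic
GATEWAY_X = 127   # CAN/UDP gateway (bridge/router)


def classify(addr: str) -> Tuple[int, str]:
    """Return (network C octet, role) for an address of the form A.B.C.X."""
    _, _, c, x = IPv4Address(addr).packed  # four octets as ints
    if x <= 125:
        role = "can_or_udp_node"
    elif x == EXTERNAL_X:
        role = "external"
    elif x == GATEWAY_X:
        role = "gateway"
    else:  # assumption: 128-255 are UDP-only nodes
        role = "udp_only_node"
    return c, role


if __name__ == "__main__":
    for addr in ("10.0.1.57", "10.0.0.126", "10.0.1.127", "10.0.2.132"):
        print(addr, classify(addr))
```

Keeping the network in C and the role in X means a gateway can decide what to do with a packet from the address alone, without a lookup table.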

Gateway responsibilities:

- Route/forward broadcast messages

- Bridge RPC/service transfers

- Push network traffic to a designated logging node

With the transports plus the gateway (bridge/router), we get the following:

CAN-only nodes can initiate RPC only with nodes on their own network, regardless of which transport the target uses (the gateway bridges between them), as long as the target's node ID is CAN-compatible, i.e. fits in the CAN node-ID range (0-127). E.g. Net_0 Node_1 can initiate an RPC over CAN to Net_0 Node_57 via Net_0 Node_127, but not to Net_0 Node_132.
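The reachability rule can be written as a small predicate. This is a sketch under the assumptions above: a CAN-only node can only name destinations on its own network whose host octet fits in the 7-bit CAN node-ID range. The function name `can_initiate_rpc` is hypothetical.

```python
# Cyphal/CAN node IDs are 7 bits wide, so the largest addressable ID is 127.
CAN_NODE_ID_MAX = 127


def can_initiate_rpc(src_net: int, dst_net: int, dst_x: int) -> bool:
    """Hypothetical check: can a CAN-only node on src_net address dst_x on dst_net?"""
    same_network = src_net == dst_net                 # no cross-network RPC from CAN
    representable = 0 <= dst_x <= CAN_NODE_ID_MAX     # must fit in a CAN node ID
    return same_network and representable


if __name__ == "__main__":
    print(can_initiate_rpc(0, 0, 57))   # Net_0 Node_1 -> Net_0 Node_57
    print(can_initiate_rpc(0, 0, 132))  # Net_0 Node_1 -> Net_0 Node_132
```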

A broadcast from Net_1 Node_132 will be received by CAN nodes on Net_1 (CAN Bus 1) as a broadcast from Net_1 Node_127. The same broadcast will be received by CAN nodes on Net_0 (CAN Bus 0) as a broadcast from Net_0 Node_127.
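A minimal sketch of the broadcast fan-out, assuming each gateway always substitutes its own ID (127) as the source when re-emitting a UDP broadcast onto its CAN bus. The `Broadcast` type and `fan_out_to_can` function are hypothetical.

```python
from dataclasses import dataclass, replace
from typing import List

GATEWAY_X = 127  # host octet / CAN node ID of each network's gateway


@dataclass(frozen=True)
class Broadcast:
    net: int        # C octet of the network the broadcast appears on
    source_x: int   # source node ID as seen by receivers on that network
    payload: bytes


def fan_out_to_can(msg: Broadcast, can_nets: List[int]) -> List[Broadcast]:
    """Re-emit one UDP broadcast on each CAN bus, sourced from that bus's gateway."""
    # Assumption: the source is always rewritten to the gateway, which also hides
    # UDP-only IDs such as 132 that cannot be represented on CAN.
    return [replace(msg, net=net, source_x=GATEWAY_X) for net in can_nets]


if __name__ == "__main__":
    original = Broadcast(net=1, source_x=132, payload=b"status")
    for copy in fan_out_to_can(original, [1, 0]):
        print(copy)
```

The payload is untouched; only the apparent origin changes, which is what lets CAN nodes accept traffic from IDs they could never address.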

All traffic from UDP nodes is forwarded to the logger at Net_2 Node_n. CAN traffic on Net_1 is forwarded to the logger by the Net_1 Node_127 gateway, and likewise for Net_0 and its gateway.
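As a sketch of the logging path, a gateway could wrap every CAN frame it sees in a UDP datagram and send a copy to the logger. The record layout, `LOGGER_ENDPOINT`, and both function names are hypothetical placeholders, not an existing Cyphal format; only the standard `socket` and `struct` modules are real.

```python
import socket
import struct

# Hypothetical logger endpoint for Net_2 Node_n; address and port are placeholders.
LOGGER_ENDPOINT = ("10.0.2.50", 9999)

# Hypothetical record header, network byte order: network octet, source node ID,
# 29-bit CAN identifier (stored in 32 bits), payload length.
LOG_HEADER = struct.Struct("!BBIB")


def make_log_datagram(net: int, source_id: int, can_id: int, data: bytes) -> bytes:
    """Wrap one CAN frame seen on bus `net` into a datagram for the logger."""
    return LOG_HEADER.pack(net, source_id, can_id, len(data)) + data


def forward_to_logger(sock: socket.socket, net: int, source_id: int,
                      can_id: int, data: bytes) -> None:
    """Send a copy of a CAN frame to the logger (called by the gateway per frame)."""
    sock.sendto(make_log_datagram(net, source_id, can_id, data), LOGGER_ENDPOINT)
```

A gateway would create one `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` and call `forward_to_logger` for each frame it receives from its bus.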

For UDP-to-CAN RPC, we can use a reserved node ID (or a set of reserved IDs) for external traffic so that it is not confused with nodes on the CAN bus. For example, with a laptop (though this could be any UDP node):

- The laptop connects to Net_2 as Node_1.

- The laptop starts communicating with Net_1 Node_n via Net_1 Node_127.

- Gateway Net_1 Node_127 presents Net_2 Node_1 on CAN Bus 1 as Net_1 Node_126.

- Net_1 Node_n uses destination Node_126 and source Node_n for the RPC.

- The gateway converts Node_126 back to Net_2 Node_1.
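The translation in these steps requires the gateway to remember which external peer currently owns the reserved ID. A minimal sketch, assuming one external peer at a time and identifying peers by (network, host octet); `ExternalIdMap`, `to_can`, and `to_udp` are hypothetical names.

```python
from typing import Optional, Tuple

Peer = Tuple[int, int]  # (network C octet, host octet X), e.g. Net_2 Node_1 -> (2, 1)

RESERVED_EXTERNAL_ID = 126  # CAN node ID that stands in for the external peer


class ExternalIdMap:
    """Hypothetical gateway-side table for a single reserved external ID."""

    def __init__(self) -> None:
        self._peer: Optional[Peer] = None

    def to_can(self, peer: Peer) -> int:
        """External peer starts an RPC: return the CAN source ID to present on the bus."""
        if self._peer is not None and self._peer != peer:
            raise RuntimeError("reserved external ID is already mapped to another peer")
        self._peer = peer
        return RESERVED_EXTERNAL_ID

    def to_udp(self, can_dest_id: int) -> Peer:
        """CAN node replies to the reserved ID: return the real UDP peer."""
        if can_dest_id != RESERVED_EXTERNAL_ID or self._peer is None:
            raise KeyError(can_dest_id)
        return self._peer
```

For the laptop example, `to_can((2, 1))` returns 126, and a reply from Net_1 Node_n addressed to 126 resolves through `to_udp(126)` back to `(2, 1)`.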

If we didn't use a reserved node ID, Net_1 Node_n would use destination Node_1 and source Node_n, which might be confused with traffic for Net_1 Node_1?

Alternatively, the gateway itself (Net_1 Node_127) could serve as the reserved external-traffic node, or we could reserve 123-126 to allow multiple external-traffic nodes.
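Reserving 123-126 turns the single mapping into a small allocator. This sketch assumes the gateway hands out the lowest free reserved ID per external peer and frees it explicitly; `ExternalIdPool` and its methods are hypothetical.

```python
from typing import Dict, Iterable, Tuple

Peer = Tuple[int, int]  # (network C octet, host octet X) of an external UDP node


class ExternalIdPool:
    """Hypothetical allocator mapping external peers to reserved CAN IDs 123-126."""

    def __init__(self, ids: Iterable[int] = range(123, 127)) -> None:
        self._free = list(ids)
        self._by_peer: Dict[Peer, int] = {}
        self._by_id: Dict[int, Peer] = {}

    def acquire(self, peer: Peer) -> int:
        """Return the reserved ID for `peer`, allocating one if needed."""
        if peer in self._by_peer:
            return self._by_peer[peer]
        if not self._free:
            raise RuntimeError("no reserved external IDs left")
        can_id = self._free.pop(0)
        self._by_peer[peer] = can_id
        self._by_id[can_id] = peer
        return can_id

    def resolve(self, can_id: int) -> Peer:
        """Map a reserved CAN ID back to the external peer that owns it."""
        return self._by_id[can_id]

    def release(self, peer: Peer) -> None:
        """Return the peer's reserved ID to the free list."""
        can_id = self._by_peer.pop(peer)
        del self._by_id[can_id]
        self._free.append(can_id)
```

This makes the statefulness explicit: the gateway has to keep these entries for as long as an RPC may still be in flight and decide when to release them (e.g. on a timeout, not shown here).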

Maybe I am thinking about this wrong, and this is becoming too stateful? There may be better ways to do this with existing message types, etc.

Edit: we could probably just use ports instead of reserved node IDs, right?