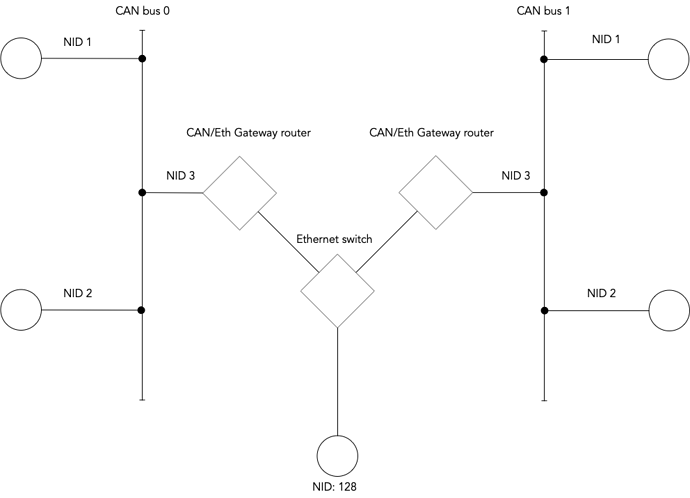

Using Scott’s diagram from a couple of posts ago:

Regarding the problem of the “router node” approach not handling service transfers, one possibly naive solution is to encapsulate cross-network transfers in an envelope that carries the extra addressing bits needed for cross-network transport. Say NID 1 on bus 0 wants to send a transfer to NID 1 on bus 1. It can do so through a special routing service with ID X. Each node keeps a routing table, so for the graph above we have:

Bus 0 NID 1:

- Bus 0 → local
- \* → Bus 0 NID 3 (the star means “all other networks”)

Bus 0 NID 3:

- Bus 0 → local
- Eth → local
- Bus 1 → Bus 1 NID 3

Bus 1 NID 1:

- Bus 0 → Bus 1 NID 3
- Eth → Bus 1 NID 3
- Bus 1 → local
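The tables above can be encoded as plain dictionaries with a next-hop lookup in which an exact match wins over the `*` wildcard. This is only a sketch: the dictionary layout and the `next_hop` helper are hypothetical, and a real node would presumably key on numeric bus and node IDs rather than display names.

```python
from typing import Dict, Optional

# Per-node routing tables from the diagram. Keys are destination networks
# ("*" is the wildcard for all other networks); values are "local" (the node
# is attached to that network) or the next-hop node.
ROUTING_TABLES: Dict[str, Dict[str, str]] = {
    "Bus 0 NID 1": {"Bus 0": "local", "*": "Bus 0 NID 3"},
    "Bus 0 NID 3": {"Bus 0": "local", "Eth": "local", "Bus 1": "Bus 1 NID 3"},
    "Bus 1 NID 1": {"Bus 0": "Bus 1 NID 3", "Eth": "Bus 1 NID 3", "Bus 1": "local"},
}


def next_hop(node: str, dst_network: str) -> Optional[str]:
    """Return "local", a next-hop node name, or None if there is no route."""
    table = ROUTING_TABLES[node]
    if dst_network in table:   # an exact match takes precedence
        return table[dst_network]
    return table.get("*")      # otherwise fall back to the wildcard, if any
```

For example, `next_hop("Bus 0 NID 1", "Bus 1")` falls through to the wildcard and returns `"Bus 0 NID 3"`, while `next_hop("Bus 0 NID 3", "Bus 1")` matches exactly and returns `"Bus 1 NID 3"`.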

To send a service transfer, Bus 0 NID 1 will craft the following request:

`service(type X, src:NID1, dst:NID3 [srcBus: bus0, dstBus: bus1, actual service(type Y, src:NID1, dst:NID1)])`

where `[ ]` delimits the data field.
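A minimal sketch of the nested request, assuming Python dataclasses as a stand-in for the real serialized format. The numeric value of `ROUTING_SERVICE_ID`, the class names, and `make_request` are all hypothetical. The helper wraps a request only when the destination bus differs from the local one, so intra-bus transfers go out unchanged.

```python
from dataclasses import dataclass
from typing import Union

ROUTING_SERVICE_ID = 100  # hypothetical numeric ID standing in for service type X


@dataclass
class RoutingEnvelope:
    """Contents of the data field of a type-X transfer."""
    src_bus: int
    dst_bus: int
    inner: "ServiceTransfer"  # the actual service(type Y, ...) request


@dataclass
class ServiceTransfer:
    service_id: int
    src_nid: int
    dst_nid: int
    data: Union[bytes, RoutingEnvelope]


def make_request(local_bus: int, router_nid: int, src_nid: int,
                 dst_bus: int, dst_nid: int, service_id: int,
                 data: bytes) -> ServiceTransfer:
    """Build a request, wrapping it in the routing service only when needed."""
    inner = ServiceTransfer(service_id, src_nid, dst_nid, data)
    if dst_bus == local_bus:
        return inner  # intra-bus: unchanged, no extra bits on the wire
    return ServiceTransfer(
        service_id=ROUTING_SERVICE_ID,
        src_nid=src_nid,
        dst_nid=router_nid,  # the router on the local bus (NID 3 in the diagram)
        data=RoutingEnvelope(src_bus=local_bus, dst_bus=dst_bus, inner=inner),
    )
```

`make_request(local_bus=0, router_nid=3, src_nid=1, dst_bus=1, dst_nid=1, service_id=42, data=b"ping")` produces a type-X transfer addressed to NID 3 whose data field holds `src_bus=0`, `dst_bus=1` and the original request to NID 1, with service ID 42 standing in for type Y.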

Bus 0 NID 3 receives this transfer on its local bus (bus 0), sees that it is the routing service type X, and looks inside the data field to find that the destination bus is bus 1. Its routing table says that bus 1 is reached through Bus 1 NID 3, so it forwards the transfer over UDP, placing the data it received in the data section of the UDP transfer. When Bus 1 NID 3 receives the UDP transfer, it inspects the data, sees that the destination is NID 1 on its local bus 1, and delivers it there. Because the envelope records the source bus and source node ID, Bus 1 NID 1 has enough information to send a response back to Bus 0 NID 1.
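The forwarding walk above can be traced with a small loop over the routers’ tables. The post does not list Bus 1 NID 3’s table, so the entry used here is an assumed mirror image of Bus 0 NID 3’s; `trace_route` and the step descriptions are hypothetical.

```python
from typing import Dict, List, Tuple

# Router tables keyed by destination bus. "local" means the router sits on
# that network and can hand the inner transfer over directly.
# Bus 0 NID 3's table is from the post; Bus 1 NID 3's is an assumed mirror image.
ROUTER_TABLES: Dict[str, Dict[str, str]] = {
    "Bus 0 NID 3": {"Bus 0": "local", "Eth": "local", "Bus 1": "Bus 1 NID 3"},
    "Bus 1 NID 3": {"Bus 1": "local", "Eth": "local", "Bus 0": "Bus 0 NID 3"},
}


def trace_route(first_router: str, dst_bus: str, dst_nid: int,
                max_hops: int = 8) -> List[Tuple[str, str]]:
    """Follow a type-X envelope from router to router until it can be delivered."""
    steps: List[Tuple[str, str]] = []
    node = first_router
    for _ in range(max_hops):
        hop = ROUTER_TABLES[node].get(dst_bus)
        if hop is None:
            raise LookupError(f"{node} has no route to {dst_bus}")
        if hop == "local":
            steps.append((node, f"deliver to NID {dst_nid} on {dst_bus}"))
            return steps
        steps.append((node, f"forward over UDP to {hop}"))
        node = hop
    raise RuntimeError("hop limit exceeded (routing loop?)")
```

`trace_route("Bus 0 NID 3", "Bus 1", 1)` returns two steps: Bus 0 NID 3 forwards over UDP to Bus 1 NID 3, which then delivers to NID 1 on Bus 1. Calling it with `"Bus 1 NID 3", "Bus 0", 1` traces the response path. The hop limit is a defensive addition against misconfigured tables that form a loop.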

One way to think of this approach is that we are recreating an IP address for each node, using extra bits in the data field as the network prefix. If we operate in 192.168.x.x, we can say that bus 0 is 192.168.0.x, bus 1 is 192.168.1.x, and the Ethernet network is 192.168.2.x, so every node ID maps directly to an IP address and back. (This is simplified by using a 24-bit mask; a 25-bit mask fits the 128 NID limit better, since it leaves exactly 7 host bits, i.e. 128 addresses.)
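The 24-bit mapping can be sketched with Python’s standard `ipaddress` module. The third-octet assignment follows the example above; the function and constant names are hypothetical.

```python
import ipaddress
from typing import Tuple

BASE = ipaddress.IPv4Network("192.168.0.0/16")    # the "192.168.x.x" space
THIRD_OCTET = {"Bus 0": 0, "Bus 1": 1, "Eth": 2}  # one /24 per network
NETWORK_BY_OCTET = {v: k for k, v in THIRD_OCTET.items()}
MAX_NID = 127  # 128 node IDs per bus


def to_ip(network: str, nid: int) -> ipaddress.IPv4Address:
    """Map (network, node ID) to 192.168.<network>.<nid>."""
    if not 0 <= nid <= MAX_NID:
        raise ValueError(f"node ID {nid} out of range 0..{MAX_NID}")
    return BASE.network_address + (THIRD_OCTET[network] << 8) + nid


def from_ip(ip: ipaddress.IPv4Address) -> Tuple[str, int]:
    """Inverse of to_ip: recover (network, node ID) from an address."""
    if ip not in BASE:
        raise ValueError(f"{ip} is outside {BASE}")
    offset = int(ip) - int(BASE.network_address)
    octet, nid = offset >> 8, offset & 0xFF
    if octet not in NETWORK_BY_OCTET or nid > MAX_NID:
        raise ValueError(f"{ip} does not correspond to a known node")
    return NETWORK_BY_OCTET[octet], nid
```

`to_ip("Bus 1", 1)` gives `192.168.1.1`, and `from_ip` recovers `("Eth", 3)` from `192.168.2.3`. Addresses .128 through .255 of each /24 never map to a node, which is the waste a 25-bit prefix avoids.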

If we make the src/dst bus fields in the service transfer above 25 bits wide, we could address the entire internet(!): 25 bits of bus number plus a 7-bit node ID make a full 32-bit IPv4 address, with the only limitation that a local network on a bus cannot have more than 128 IPs. As an optimization, we could use fewer than 25 bits by fixing the first few bits of the prefix. For example, fixing 192.168.0 as the prefix would allow us to talk to two networks (192.168.0.0/25 and 192.168.0.128/25) with a single bit of overhead.
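The bit arithmetic behind the 25-bit variant, sketched with `ipaddress`: a 25-bit bus number concatenated with a 7-bit node ID fills exactly one 32-bit IPv4 address. The fixed-prefix optimization is modeled by assuming both ends agree on 192.168.0.0/24 out of band, so only one bus bit needs to travel in the envelope. All names here are hypothetical.

```python
import ipaddress
from typing import Tuple

NID_BITS = 7              # 128 node IDs per bus -> 7 host bits
BUS_BITS = 32 - NID_BITS  # 25-bit bus field spans the whole IPv4 space


def pack(bus: int, nid: int) -> ipaddress.IPv4Address:
    """Concatenate a 25-bit bus number and a 7-bit node ID into an IPv4 address."""
    if not 0 <= bus < (1 << BUS_BITS):
        raise ValueError("bus number needs more than 25 bits")
    if not 0 <= nid < (1 << NID_BITS):
        raise ValueError("node ID needs more than 7 bits")
    return ipaddress.IPv4Address((bus << NID_BITS) | nid)


def unpack(ip: ipaddress.IPv4Address) -> Tuple[int, int]:
    """Split an address back into (bus number, node ID)."""
    value = int(ip)
    return value >> NID_BITS, value & ((1 << NID_BITS) - 1)


# Optimization: agree on a fixed prefix out of band and transmit only the rest.
FIXED = ipaddress.IPv4Network("192.168.0.0/24")
SHORT_BUS_BITS = BUS_BITS - FIXED.prefixlen  # 25 - 24 = 1 bit -> two networks


def pack_short(short_bus: int, nid: int) -> ipaddress.IPv4Address:
    """Expand a short bus field (the bits below the fixed prefix) to a full address."""
    if not 0 <= short_bus < (1 << SHORT_BUS_BITS):
        raise ValueError("short bus field out of range")
    bus = (int(FIXED.network_address) >> NID_BITS) | short_bus
    return pack(bus, nid)
```

`pack_short(0, 5)` yields `192.168.0.5` and `pack_short(1, 5)` yields `192.168.0.133`, i.e. node 5 on the 192.168.0.0/25 and 192.168.0.128/25 networks respectively.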

A nice feature of this approach is that it adds no overhead to intra-bus communication: you only pay for cross-network communication when you request it.