Agreed. I’m concerned about the large amount of periodic data that a simple periodic broadcast could add to a bus. Even if we send it at a low priority, it still consumes bandwidth that would otherwise be available to the system for other purposes. Furthermore, the subscription data is consumed only by tooling for CAN systems. Perhaps we still need a service that enables/disables these broadcasts?

I vote for keeping it as it is. Perhaps a reclassification of the ranges would help? Something like the first 8192 belong to the standard space of subject identifiers and the remaining belong to an “extended” set?

Having considered the implications, I am inclined to revise the subject-ID range by dropping the two most significant bits. My motivation is based on the following observations:

- The state-space of a safety-critical system should be minimized in the interest of simplifying the analysis and verification. This applies to the subject-ID space because even though at first glance it has no direct manifestation anywhere outside of the message routing, it indirectly affects other entities such as the introspection interface we are discussing. As a specific example, consider the subject list compression idea you introduced earlier, which can be obviated completely by constraining the range.

- I suspect that few realistic systems would require more than 8192 subjects per network. It then follows from the above that the excessive variability offered by [0, 32768) may be harmful.

- Should the need arise to review the range in the future after the beta is out, it will only be possible to do so towards the expansion of the range rather than its contraction.

We can discuss it further at the dev call today. If this proposal is accepted, we will have exactly two remaining items to correct before the beta is published.

But let’s explicitly reserve the right to increase the subject-ID range in a future revision of the protocol.

UAVCAN/UDP multicasting & IGMP

Last weekend I was working on my experimental UAVCAN-based Yukon backend PoC. While doing that, I decided to revisit the idea of employing IP multicasting for the experimental UAVCAN/UDP transport instead of broadcasting (this was partly inspired by the realization that with the broadcast-based approach, there may be at most one network operating on the loopback interface). The original proposal (in the OP here) closely resembles AFDX, where the switching logic is intended to be configured statically. Later, in “Stabilizing uavcan.node.port.List.0.1, introspection, and switched networks”, I briefly outlined the idea of an IGMP-like auto-configuration logic where the switch automatically constructs the distribution tree by snooping on the port list messages published by network participants. Further inquiry into this subject revealed that IGMP itself does not appear to be inherently incompatible with real-time or functional safety requirements, and that its dynamic behaviors can be replaced with static pre-configuration if that is preferred in a highly deterministic setting.

It is worth pointing out that not all networking equipment provides full support for IGMP. It is common for simpler switching hardware to handle multicast traffic by simply rebroadcasting multicast packets into every output port, which is still technically compatible with the formal specification of IP multicast. This demonstrates that a multicast-based solution can be considered a special case of the original broadcast-based one, and that in highly deterministic settings it is possible to intentionally disable the dynamic behaviors introduced by IGMP in favor of static configuration on the switch, similar to AFDX.

Therefore, the advantage of this updated multicast proposal is that it makes efficient use of the network bandwidth using standard COTS hardware without the need for any UAVCAN-specific traffic handling policies, while still being compatible with fully static configurations should that be preferred. It should also be noted that the question of reliable delivery, which frequently arises in relation to multicasting, is addressed by (1) the basic assumption that the underlying transport network provides a guaranteed service level, and (2) the deterministic data loss mitigation method, which can be applied to manage spurious data losses caused by external factors like interference.

This proposal is focused on IPv4 because I expect that the advantages of IPv6 are less relevant in an intravehicular setting. Nevertheless, due to the similarities between v4 and v6, it is trivial to port this proposal to IPv6 should the need arise. The differences will be confined to the specific definitions of IP addresses and some related numbers, while the general principles will remain unchanged.

The changes introduced by this proposal affect only nodes that subscribe to UAVCAN subjects. There is no effect on request/response interactions (because they are inherently unicast), and there is virtually no effect on publications because per RFC 1112, in order to emit a multicast packet, a limited level-1 implementation without the full support of IGMP and multicast-specific packet handling policies is sufficient.

The scheme proposed here for mapping a UAVCAN data specifier to a multicast IP address (and the reverse) is static and unsophisticated. This is possible because the UAVCAN transport layer model is in itself very simple and it utilizes only compact numerical entity identifiers (instead of, say, textual topic names).

Without the need to rely on explicit broadcasting, the netmask becomes irrelevant for node configuration (per the original proposal it was necessary for deducing the subnet broadcast address). In the interest of simplification, the new approach is to treat the 16 least significant bits of the IP address as the node-ID, which does not necessarily imply that all 65536 addresses are admissible (the earlier proposal to limit the range of node-IDs to [0, 4095] still holds). This is both machine- and human-friendly, especially with IPv6, where the least significant hextet simply becomes the node-ID.

The following 7 bits of the IPv4 address are used to differentiate independent UAVCAN/UDP transport networks sharing the same IP network (e.g., multiple UAVCAN/UDP networks running on localhost or on some physical network). This is similar to the domain identifier in DDS. For clarity, this 7-bit value will be referred to as the subnet-ID; it is not used anywhere else in the protocol other than in the construction of the multicast group address, as will be shown below. The remaining 9 bits of the IPv4 address are not used. The reason why the width is chosen to be specifically 7 and 9 bits will be provided below.

Schematically, the IPv4 address of a node is structured as follows:

    xxxxxxxx.xddddddd.nnnnnnnn.nnnnnnnn
    \________/\_____/ \_______________/
     (9 bits) (7 bits)    (16 bits)
      ignored UAVCAN/UDP  UAVCAN/UDP
              subnet-ID    node-ID
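To make the layout above concrete, here is a minimal sketch that splits a node's IPv4 address into its subnet-ID and node-ID. The function name `parse_node_address` is hypothetical and is not part of PyUAVCAN; the sketch also does not enforce the [0, 4095] node-ID limit mentioned earlier.

```python
import ipaddress
from typing import Tuple


def parse_node_address(ip: str) -> Tuple[int, int]:
    """Return (subnet_id, node_id) for an IPv4 node address (hypothetical helper)."""
    raw = int(ipaddress.IPv4Address(ip))
    node_id = raw & 0xFFFF           # 16 least significant bits
    subnet_id = (raw >> 16) & 0x7F   # the following 7 bits; the top 9 bits are ignored
    return subnet_id, node_id


if __name__ == "__main__":
    for address in ("127.0.0.51", "192.168.1.21"):
        print(address, parse_node_address(address))
```

Note that 192.168.1.21 yields subnet-ID 40 because the most significant bit of the second octet (168) falls into the ignored part.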

Then, in order to provide means for publishers and subscribers to find each other’s endpoints statically (as per UAVCAN core design goals, dynamic discovery is inadmissible) while not conflicting with other UAVCAN/UDP subnets, the multicast group address for a given subnet and subject-ID is constructed as follows:

      fixed in this
      Specification  reserved
         (5 bits)    (3 bits)
           ____          _
          /    \        / \
      11101111.0ddddddd.000sssss.ssssssss
      \__/      \_____/    \____________/
    (4 bits)   (7 bits)      (13 bits)
      IPv4    UAVCAN/UDP     UAVCAN/UDP
    multicast  subnet-ID     subject-ID
     prefix
                \_______________________/
                        (23 bits)
                collision-free multicast
                   addressing limit of
                  Ethernet MAC for IPv4

From the most significant bit to the least significant bit, the components are as follows:

- IPv4 multicast prefix is defined by RFC 1112.

- The following 5 bits are set to 0b11110 by this Specification. The motivation is as follows:

  - Setting the four least significant bits of the most significant byte to 0b1111 moves the address range into the administratively-scoped range (239.0.0.0/8, RFC 2365), which ensures that there may be no conflicts with well-known multicast groups.

  - Setting the most significant bit of the second octet to zero ensures that there may be no conflict with reserved sub-ranges within the administratively-scoped range. The resulting range 239.0.0.0/9 is entirely ad-hoc defined.

  - Fixing the 5+4=9 most significant bits of the multicast group address ensures that the variability is confined to the 23 least significant bits of the address only, which is desirable because the IPv4 Ethernet MAC layer does not differentiate beyond the 23 least significant bits of the multicast group address (i.e., addresses that differ only in the 9 MSb collide at the MAC layer, which is unacceptable in a real-time system) (RFC 1112 section 6.4). Without this limitation, an engineer deploying a network might inadvertently create a configuration that causes MAC-layer collisions which may be difficult to detect.

- The following 7 bits (the least significant bits of the second octet) are used to differentiate independent UAVCAN/UDP networks sharing the same physical IP network. Since the 9 most significant bits of the node IP address are not represented in the multicast group address, nodes whose IP addresses differ only by the 9 MSb are not distinguished by UAVCAN/UDP. This limitation does not appear to be significant, though, because such configurations are easy to avoid. It follows that there may be up to 128 independent UAVCAN/UDP networks sharing the same IP subnet.

- The following 16 bits define the data specifier:

  - 3 bits reserved for future use.

  - 13 bits represent the subject-ID as-is.
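The MAC-layer collision concern can be illustrated with a short sketch of the IPv4-to-Ethernet multicast mapping from RFC 1112 section 6.4 (the fixed prefix 01:00:5e followed by the 23 least significant bits of the group address). The helper name `multicast_mac` is made up for this illustration.

```python
import ipaddress


def multicast_mac(group: str) -> str:
    """Map an IPv4 multicast group to its Ethernet MAC address (illustrative helper)."""
    low23 = int(ipaddress.IPv4Address(group)) & 0x7FFFFF  # only 23 bits survive
    mac = (0x01005E << 24) | low23
    return ":".join(f"{(mac >> shift) & 0xFF:02x}" for shift in range(40, -8, -8))


if __name__ == "__main__":
    # These two groups differ only in the 9 most significant bits,
    # so they map to the same MAC address.
    print(multicast_mac("239.40.2.42"))
    print(multicast_mac("239.168.2.42"))
    # A different subnet-ID changes the low 23 bits, hence a different MAC.
    print(multicast_mac("239.41.2.42"))
```

Because the proposal fixes the 9 most significant bits, every admissible group address gets a distinct MAC address.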

Publishers should use the TTL value of 16 by default, which is chosen as a sensible default suitable for any intravehicular network. Per RFC 1112, the default TTL is 1, which is unacceptable.

Examples

    Node IP address:  01111111 00000010 00000000 00001000
                           127        2        0        8
    Subject-ID:                            00010 00101010
    Multicast group:  11101111 00000010 00000010 00101010
                           239        2        2       42

    Node IP address:  11000000 10101000 00000000 00000001
                           192      168        0        1
    Subject-ID:                            00010 00101010
    Multicast group:  11101111 00101000 00000010 00101010
                           239       40        2       42
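The examples above can be reproduced with a few lines of Python. This is only a sketch of the mapping as described in this post; `multicast_group` and `SUBJECT_ID_MAX` are names chosen for the illustration and do not come from any existing library.

```python
import ipaddress

SUBJECT_ID_MAX = 8191  # 13-bit subject-ID, as proposed above


def multicast_group(node_ip: str, subject_id: int) -> str:
    """Build the multicast group address for a node's subnet and a subject-ID."""
    if not 0 <= subject_id <= SUBJECT_ID_MAX:
        raise ValueError(f"subject-ID out of range: {subject_id}")
    # The subnet-ID is the 7 least significant bits of the second octet.
    subnet_id = (int(ipaddress.IPv4Address(node_ip)) >> 16) & 0x7F
    # 0b11101111 = 239: multicast prefix 1110 followed by 1111;
    # the next fixed bit and the 3 reserved bits are left at zero.
    raw = (0b11101111 << 24) | (subnet_id << 16) | subject_id
    return str(ipaddress.IPv4Address(raw))


if __name__ == "__main__":
    subject_id = 0b00010_00101010  # 554, as in the examples above
    print(multicast_group("127.2.0.8", subject_id))
    print(multicast_group("192.168.0.1", subject_id))
```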

Additional background

- RFC 1112 (also see specs for IGMPv2/v3)

- RFC 2365

- DDS/RTPS specification

- https://tldp.org/HOWTO/Multicast-HOWTO-6.html

- [Russian] https://habr.com/ru/company/cbs/blog/309486/

- ZeroMQ multicast UDP implementation

Socket API demo

Here is a trivial send-receive demo used to test the Berkeley socket API:

    #!/usr/bin/env python3
    # Receiver: joins the multicast group on the given interface and prints incoming datagrams.
    import socket

    MULTICAST_GROUP = '239.1.1.1'
    #IFACE = '127.1.23.123'
    IFACE = '192.168.1.200'

    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)

    # This is necessary on GNU/Linux, see https://habr.com/ru/post/141021/
    sock.bind((MULTICAST_GROUP, 16384))

    # Note that using INADDR_ANY in IP_ADD_MEMBERSHIP doesn't actually mean "any",
    # it means "choose one automatically": https://tldp.org/HOWTO/Multicast-HOWTO-6.html
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP,
                    socket.inet_aton(MULTICAST_GROUP) + socket.inet_aton(IFACE))

    while True:
        print(sock.recvfrom(1024))

The sender counterpart:

    #!/usr/bin/env python3
    # Sender: emits one datagram to each of two multicast groups via the given interface.
    import socket

    #IFACE = '127.42.0.200'
    IFACE = '192.168.1.200'
    TTL = 16  # If not specified, defaults to 1 per RFC 1112.

    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL, TTL)

    # https://tldp.org/HOWTO/Multicast-HOWTO-6.html
    # https://stackoverflow.com/a/26988214/1007777
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_IF, socket.inet_aton(IFACE))
    sock.bind((IFACE, 0))

    sock.sendto(b'Hello world! 1', ('239.1.1.1', 16384))
    sock.sendto(b'Hello world! 2', ('239.1.1.2', 16384))

With multicasting in place, it is no longer necessary to segregate subjects by UDP ports; instead, a constant port number can be used. Suppose it could be 16383 (a value as good as any other, as long as it belongs to the ephemeral range and does not conflict with any popular well-known ports).

Service ports can continue using the original arrangement, except that instead of growing downward from a pre-defined base port, they should extend upward for simplicity.

The resulting arrangement is as follows:

- Subject port: 16383

- Service request port: (16384 + service_id * 2)

- Service response port: (16384 + service_id * 2 + 1)
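The port arrangement listed above is simple enough to express directly. A minimal sketch follows; the constant and function names are hypothetical, and only the formulas come from this post.

```python
from typing import Tuple

SUBJECT_PORT = 16383       # constant port shared by all subjects
SERVICE_BASE_PORT = 16384  # service ports extend upward from here


def service_ports(service_id: int) -> Tuple[int, int]:
    """Return (request_port, response_port) for the given service-ID."""
    if service_id < 0:
        raise ValueError(f"invalid service-ID: {service_id}")
    request_port = SERVICE_BASE_PORT + service_id * 2
    return request_port, request_port + 1


if __name__ == "__main__":
    # Service-ID 430 appears in the transport statistics log further down.
    print(service_ports(0))
    print(service_ports(430))
```

Under this arrangement the request and response ports of any service are always adjacent, with the request port being even.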

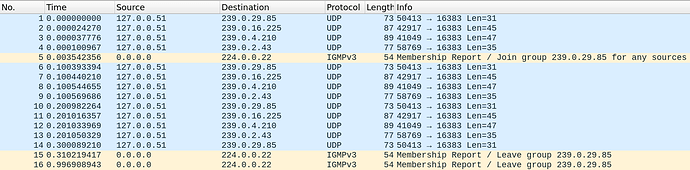

Here is a brief example I came across while testing my PoC implementation (currently on a branch named multicasting). Here we have a command (copy-pasted from the integration test suite):

$ pyuavcan -v pub 4321.uavcan.diagnostic.Record.1.1 '{severity: {value: 6}, timestamp: {microsecond: 123456}, text: "Hello world!"}' 1234.uavcan.diagnostic.Record.1.1 '{text: "Goodbye world."}' 555.uavcan.si.sample.temperature.Scalar.1.0 '{kelvin: 123.456}' --count=3 --period=0.1 --priority=slow --heartbeat-fields='{vendor_specific_status_code: 54}' --tr='UDP("127.0.0.51")'

2020-12-07 04:55:13 518515 INFO pyuavcan._cli.commands._subsystems.transport: Configuring the transport from command line arguments; environment variable PYUAVCAN_CLI_TRANSPORT is ignored

2020-12-07 04:55:13 518515 INFO pyuavcan._cli.commands._subsystems.transport: Resulting transport configuration: [UDPTransport(<udp anonymous="false" srv_mult="1" mtu="1200">127.0.0.51</udp>, ProtocolParameters(transfer_id_modulo=18446744073709551616, max_nodes=65535, mtu=1200), local_node_id=51)]

2020-12-07 04:55:13 518515 INFO pyuavcan._cli.commands.publish: Publication set: [Publication(uavcan.diagnostic.Record.1.1(timestamp=uavcan.time.SynchronizedTimestamp.1.0(microsecond=123456), severity=uavcan.diagnostic.Severity.1.0(value=6), text='Hello world!'), Publisher(dtype=uavcan.diagnostic.Record.1.1, transport_session=UDPOutputSession(OutputSessionSpecifier(data_specifier=MessageDataSpecifier(subject_id=4321), remote_node_id=None), PayloadMetadata(extent_bytes=300)))), Publication(uavcan.diagnostic.Record.1.1(timestamp=uavcan.time.SynchronizedTimestamp.1.0(microsecond=0), severity=uavcan.diagnostic.Severity.1.0(value=0), text='Goodbye world.'), Publisher(dtype=uavcan.diagnostic.Record.1.1, transport_session=UDPOutputSession(OutputSessionSpecifier(data_specifier=MessageDataSpecifier(subject_id=1234), remote_node_id=None), PayloadMetadata(extent_bytes=300)))), Publication(uavcan.si.sample.temperature.Scalar.1.0(timestamp=uavcan.time.SynchronizedTimestamp.1.0(microsecond=0), kelvin=123.456), Publisher(dtype=uavcan.si.sample.temperature.Scalar.1.0, transport_session=UDPOutputSession(OutputSessionSpecifier(data_specifier=MessageDataSpecifier(subject_id=555), remote_node_id=None), PayloadMetadata(extent_bytes=11))))]

2020-12-07 04:55:13 518515 INFO pyuavcan._cli.commands.publish: Publication cycle 1 of 3 completed; sleeping for 0.099 seconds

2020-12-07 04:55:13 518515 INFO pyuavcan._cli.commands.publish: Publication cycle 2 of 3 completed; sleeping for 0.098 seconds

2020-12-07 04:55:13 518515 INFO pyuavcan._cli.commands.publish: Publication cycle 3 of 3 completed; sleeping for 0.098 seconds

2020-12-07 04:55:13 518515 INFO pyuavcan._cli.commands.publish: UDPTransportStatistics(received_datagrams={MessageDataSpecifier(subject_id=7509): SocketReaderStatistics(accepted_datagrams={}, dropped_datagrams={51: 3}), ServiceDataSpecifier(service_id=430, role=<Role.REQUEST: 1>): SocketReaderStatistics(accepted_datagrams={}, dropped_datagrams={})})

2020-12-07 04:55:13 518515 INFO pyuavcan._cli.commands.publish: Subject 7509: SessionStatistics(transfers=3, frames=3, payload_bytes=21, errors=0, drops=0)

2020-12-07 04:55:13 518515 INFO pyuavcan._cli.commands.publish: Subject 4321: SessionStatistics(transfers=3, frames=3, payload_bytes=63, errors=0, drops=0)

2020-12-07 04:55:13 518515 INFO pyuavcan._cli.commands.publish: Subject 1234: SessionStatistics(transfers=3, frames=3, payload_bytes=69, errors=0, drops=0)

2020-12-07 04:55:13 518515 INFO pyuavcan._cli.commands.publish: Subject 555: SessionStatistics(transfers=3, frames=3, payload_bytes=33, errors=0, drops=0)

Shortly after launch the node subscribed to the heartbeat subject (for reasons of node-ID collision detection). Its subject-ID is 7509 = (29 << 8) | 85, which translates into 239.0.29.85. The command takes 300 ms to execute (3 message sets, interval 0.1 seconds). All transfers were single-frame transfers.
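Since the mapping is static, it can also be reversed, for example when looking at a packet capture. Below is a small sketch that recovers the subnet-ID and the subject-ID from a group address; `parse_multicast_group` is a name invented for this illustration.

```python
import ipaddress
from typing import Tuple


def parse_multicast_group(group: str) -> Tuple[int, int]:
    """Return (subnet_id, subject_id) encoded in a UAVCAN/UDP multicast group address."""
    raw = int(ipaddress.IPv4Address(group))
    if raw >> 23 != 0b111011110:   # 4-bit multicast prefix + 5 fixed bits
        raise ValueError(f"not a UAVCAN/UDP group address: {group}")
    if (raw >> 13) & 0b111 != 0:   # 3 reserved bits must be zero
        raise ValueError(f"reserved bits are set: {group}")
    subnet_id = (raw >> 16) & 0x7F
    subject_id = raw & 0x1FFF      # 13 least significant bits
    return subnet_id, subject_id


if __name__ == "__main__":
    # The heartbeat group from the log above: subnet 0, subject (29 << 8) | 85
    print(parse_multicast_group("239.0.29.85"))
```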

How about a simple practical example of running UAVCAN/UDP on a physical hybrid Ethernet + wireless network? I just ran this locally while testing the new multicast implementation I made in PyUAVCAN. Naturally, the implementation is reasonably covered by integration tests (test coverage ~80%) that execute both on Windows and GNU/Linux, but they use the loopback interface instead of a physical network (the latter is harder to set up in the CI environment, but we might get there one day). I wanted to see how my regular office-grade networking equipment handles local multicast traffic.

On the first computer, generate the DSDL definitions and launch the PyUAVCAN demo application. Don’t forget to change the hard-coded local IP address in the demo to something appropriate per your local network configuration (in my case it’s 192.168.1.21, this computer is connected over Wi-Fi):

uvc dsdl-gen-pkg ~/uavcan/pyuavcan/tests/dsdl/namespaces/sirius_cyber_corp

uvc dsdl-gen-pkg ~/uavcan/pyuavcan/tests/public_regulated_data_types/uavcan

basic_usage.py

You may want to also launch Wireshark on either computer to see what’s happening on the network. Although, on the other hand, being stateless and simple, UAVCAN does not generate much interesting traffic (aside from, perhaps, IGMP and deterministic packet loss mitigation).

On the other computer, generate the same DSDL definitions as well, and configure the transport for the CLI tool via the environment variable (it is also possible to use the command line arguments, but I find it to be less convenient):

uvc dsdl-gen-pkg ~/uavcan/pyuavcan/tests/dsdl/namespaces/sirius_cyber_corp

uvc dsdl-gen-pkg ~/uavcan/pyuavcan/tests/public_regulated_data_types/uavcan

export PYUAVCAN_CLI_TRANSPORT='UDP("192.168.1.200",anonymous=True)'

Make sure we can see the heartbeats from the other node (option -M is to see transfer metadata):

    $ uvc sub uavcan.node.Heartbeat.1.0 -M
    ---
    7509:
      _metadata_:
        timestamp:
          system: 1607803261.129515
          monotonic: 687025.357389
        priority: nominal
        transfer_id: 962
        source_node_id: 277
      uptime: 962
      health:
        value: 0
      mode:
        value: 0
      vendor_specific_status_code: 74
    ...

Yup. Let’s stop the heartbeat subscriber and listen to the log messages instead (add -M if you need to see which node each message is coming from):

uvc sub uavcan/diagnostic/Record_1_1

(notice that the CLI tool understands different type name notations for better UX, so it is possible to rely on file system autocompletion)

Keeping that running, in a different terminal, configure the transport in the non-anonymous mode and make a service call to the remote node:

    $ export PYUAVCAN_CLI_TRANSPORT='UDP("192.168.1.200")'
    $ uvc call 277 123.sirius_cyber_corp.PerformLinearLeastSquaresFit_1_0 '{points: [{x: 1, y: 1}, {x: 10, y: 30}]}'
    ---
    123:
      slope: 3.2222222222222223
      y_intercept: -2.2222222222222214

(where 277 comes from 192.168.1.21 as (1 << 8) + 21)

So we have the response. Also, the remote node emitted a log message that we can see in the first terminal:

    $ uvc sub uavcan.diagnostic.Record_1_1
    ---
    8184:
      timestamp:
        microsecond: 0
      severity:
        value: 1
      text: Least squares request from 456 time=1607803038.091053493 tid=0 prio=4
    ---
    8184:
      timestamp:
        microsecond: 0
      severity:
        value: 2
      text: 'Solution for (1.0,1.0),(10.0,30.0): 3.2222222222222223, -2.2222222222222214'