Stabilizing uavcan.node.port.List.0.1, introspection, and switched networks

In the interest of advancing the DS-015 effort, I have been working on the set of optional UAVCAN application-level capabilities that are to be made mandatory by DS-015. That forced me to return to the long-postponed issue of stabilizing the port discovery services under uavcan.node.port (which, as of today, bear the version number 0.1), particularly the listing service uavcan.node.port.List. Currently, the service is implemented using the pull model, where the inspected node responds with a list of subject- or service-identifiers when requested by the caller.

My recent work on the Interface Design Guidelines brought it to my attention that the design of this particular service (as in architecture, not UAVCAN-service) is not in perfect alignment with our guidelines. Specifically, the pull model provides no means of notifying inspectors of a change in the pub/sub configuration on the inspected node, and it introduces a degree of statefulness into the service that is easily avoidable. Additionally, handling the service may be time-consuming, and leaving the decision on when to invoke it to external agents complicates the implementation of robust and predictable scheduling on the local node. These observations prompted me to consider replacing the port list service with a subject (still optional, of course) that is to be published periodically and on-change to announce the current subscription configuration of the local node. The publisher configuration can be subjected to the same treatment, but it is not immediately required by the application-level objectives at hand, so this part of the problem can be postponed indefinitely. After all, the publication set is always trivially observable on the network by means of mere packet monitoring, which is not the case for the subscription configuration.
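The "periodically and on-change" publication policy can be sketched roughly as follows. This is only an illustration under stated assumptions: the class name `SubscriptionAnnouncer`, the `publish` callback, and the `poll` method are hypothetical and do not belong to any existing UAVCAN library, and the default period of one second is a placeholder.

```python
from typing import Callable, FrozenSet, Optional


class SubscriptionAnnouncer:
    """Decides when the local node should announce its subscription set.

    Hypothetical helper: 'publish' stands in for whatever actually emits the message.
    """

    def __init__(self, publish: Callable[[FrozenSet[int]], None], period: float = 1.0) -> None:
        self._publish = publish
        self._period = period  # Seconds between periodic announcements (assumed value).
        self._last_set: Optional[FrozenSet[int]] = None
        self._last_time = float("-inf")  # Forces a publication on the first poll.

    def poll(self, subject_ids: FrozenSet[int], now: float) -> bool:
        """Publish if the set has changed or the period has elapsed.

        Returns True if a message was published during this call.
        """
        changed = subject_ids != self._last_set
        due = (now - self._last_time) >= self._period
        if changed or due:
            self._publish(subject_ids)
            self._last_set = subject_ids
            self._last_time = now
            return True
        return False
```

The point of the sketch is that the local node alone decides when the work is done: `poll` is called from its own scheduler, and no external agent can trigger the potentially expensive handling at an inconvenient moment.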

The introspection I am speaking about here is vital for advanced diagnostic tools such as Yukon with its Canvas (shown below), which would be unable to display any meaningful interconnection information without being able to detect not only outputs but also inputs.

Beyond introspection, this capability is important in switched network transports for automatic configuration of the switching logic. As I briefly touched upon in the OP, specialized AFDX switches implement routing and network policy enforcement that are configured statically at system definition time (practical installations of AFDX may employ rigid time schedules generated with the help of automatic theorem provers). Acting as the traffic policy enforcer, an AFDX switch is able to confine fault propagation should one of its ports be affected by a non-conformant emitter (the so-called babbling idiot failure). The output ports for incoming frames are selected based on the static configuration of virtual links; to avoid delving into the details of that technology, this can be thought of as a static switching table.

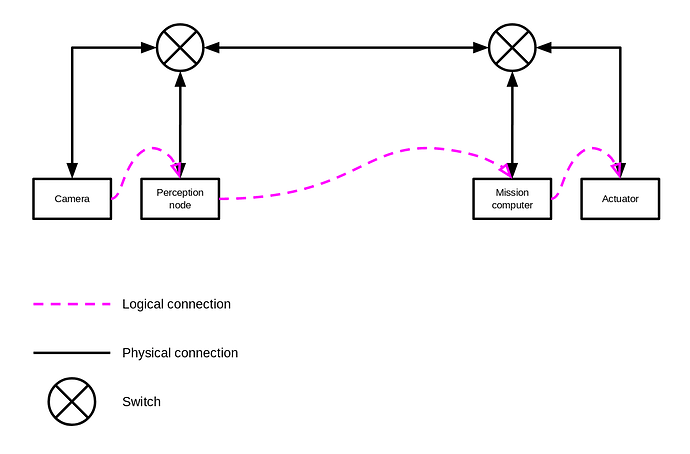

In the UAVCAN/UDP broadcast model discussed in the OP, the output port contention and the resulting latency issues are proposed to be managed by statically configuring L2 filters at the switch such that data that is not relevant at a given port is dropped by the switch, thus reducing the latency bound of the relevant data. The following synthetic example illustrates the point – suppose that the camera node generates significant traffic that is not desired beyond the left switch, and the perception node generates low traffic destined towards the mission computer node where the latency is critical:

If the output ports were left unfiltered, the traffic from the camera would be propagated towards the right-side subnet, increasing the output port contention and the latency envelope throughout.

The static L2-filter configuration is efficient at managing the port contention issues if we consider its technical merits only, but it creates issues for quickly evolving applications at lower DAL/ASIL, where the need to reconfigure the switch (or the excessive rebroadcasting that would result if no filtering is configured) may create undue adoption obstacles.

Drawing upon the theory explained in Safety and Certification Approaches for Ethernet-Based Aviation Databuses [Yann-Hang Lee et al., 2005], section 5.2 "Deterministic message transmission in switched network", it is apparently trivial to define a specialized parallel output-queued (POQ) switch that is able to derive the output port filtering policy automatically by merely observing the subscription information arriving from said port, regardless of the number of hops and the branching beyond the port. I will leave this as a note to my future self to expand upon this idea later because these technicalities are not needed in this discussion.
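To make the idea a little more tangible, here is a minimal sketch of how such a switch might derive its output filters. It is an illustration only and not a description of any real switch: the class and method names are hypothetical, queuing and stale-entry expiration are left out, and it assumes that the subscription announcements themselves are flooded to every port so that each switch observes the announcements of all nodes reachable through a given port.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set


class SubscriptionLearningSwitch:
    """Learns, per port, which subject-IDs are wanted by the nodes behind that port."""

    def __init__(self, port_count: int) -> None:
        self._ports = range(port_count)
        # port index -> node-ID -> subject-IDs announced by that node via that port
        self._subs: Dict[int, Dict[int, Set[int]]] = defaultdict(dict)

    def on_subscription_message(self, ingress_port: int, node_id: int,
                                subject_ids: Iterable[int]) -> None:
        """Record the latest subscription set of a node seen behind the ingress port."""
        self._subs[ingress_port][node_id] = set(subject_ids)

    def output_ports(self, ingress_port: int, subject_id: int) -> List[int]:
        """Select the ports a message of the given subject should be forwarded to."""
        return [
            port for port in self._ports
            if port != ingress_port
            and any(subject_id in ids for ids in self._subs[port].values())
        ]
```

In the camera example above, if the mission computer behind the uplink port is the only subscriber to the perception subject, then the camera traffic yields an empty output port list at the left switch and never reaches the right-side subnet.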

The described ideas rely on a compact representation of the entirety of the subscription state of a given node in one message. Let the type be named uavcan.node.port.Subscription. There are a few obvious approaches here.

- Bitmask-based. Given the subject-ID space of 2^{15} elements, the required memory footprint is exactly 4096 bytes.

```
# Node subscription information.
# This message announces the interest of the publishing node in a particular set of subjects.
# The objective of this message is to facilitate automatic filtering in switched networks and network introspection.
# Nodes should publish this message periodically at the recommended rate of ~1 Hz at the priority level ~SLOW.
# Additionally, nodes are recommended to publish this message whenever the subscription set is modified.

uint8 SUBJECT_ID_BIT_LENGTH = 15

bool[2 ** SUBJECT_ID_BIT_LENGTH] subject_id_mask
# The bit at index X is set if the node is interested in receiving messages of subject-ID X.
# Otherwise, the bit is cleared.
```

- List-based with inversion. The worst-case size is substantially higher – over 32 KiB. The advantage of this approach is that low-complexity nodes will not be burdened unnecessarily with managing large outgoing transfers since their subscription lists are likely to be very short. The disadvantage is the comparatively high network throughput (albeit at a low priority) being utilized for mere ancillary functions.

```
# Node subscription information.
# This message announces the interest of the publishing node in a particular set of subjects.
# The objective of this message is to facilitate automatic filtering in switched networks and network introspection.
# Nodes should publish this message periodically at the recommended rate of ~1 Hz at the priority level ~SLOW.
# Additionally, nodes are recommended to publish this message whenever the subscription set is modified.

@union

uint16 CAPACITY = 2 ** 15 / 2

uavcan.node.port.SubjectID.1.0[<=CAPACITY] subscribed_subject_ids
# If this option is chosen, the message contains the actual subject-IDs the node is interested in.

uavcan.node.port.SubjectID.1.0[<=CAPACITY] not_subscribed_subject_ids
# If this option is chosen, the message contains the inverted list: subject-IDs that the node is NOT interested in.
```
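The trade-off between the two representations can be illustrated with a short sketch. It is not a DSDL serializer: the function names are hypothetical, the bit packing order (least significant bit first) is an assumption, and the list size estimate assumes two bytes per subject-ID while ignoring the union tag and the array length prefix.

```python
from typing import List, Set, Tuple

SUBJECT_ID_BIT_LENGTH = 15
SUBJECT_ID_COUNT = 2 ** SUBJECT_ID_BIT_LENGTH  # 32768 possible subject-IDs
LIST_CAPACITY = SUBJECT_ID_COUNT // 2          # 16384 entries, as in CAPACITY above


def encode_bitmask(subscribed: Set[int]) -> bytes:
    """Bitmask approach: one bit per subject-ID, always 4096 bytes."""
    mask = bytearray(SUBJECT_ID_COUNT // 8)
    for sid in subscribed:
        mask[sid // 8] |= 1 << (sid % 8)  # Assumed bit order: LSB first.
    return bytes(mask)


def choose_list(subscribed: Set[int]) -> Tuple[bool, List[int]]:
    """List approach: return (inverted, ids), inverting when the plain list would not fit."""
    if len(subscribed) <= LIST_CAPACITY:
        return False, sorted(subscribed)
    return True, sorted(set(range(SUBJECT_ID_COUNT)) - subscribed)


def list_payload_size(id_count: int) -> int:
    """Approximate payload size in bytes, assuming two bytes per subject-ID."""
    return 2 * id_count
```

Under these assumptions, a node with three subscriptions needs about 6 bytes with the list approach against a fixed 4096 bytes with the bitmask, whereas a node subscribed to exactly half of the subject-ID space hits the list worst case of 32768 bytes plus overhead.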

Because of their limited ID space, the service ports can be efficiently represented as bitmasks in the same message, like the first approach above, should that be shown to be necessary:

```
bool[512] server_service_id_mask
bool[512] client_service_id_mask
```

Is it practical to consider the further restriction of the subject-ID space, assuming that it is to be done without affecting the compatibility of any existing UAVCAN/CAN v1 systems out there?


Assembling the packet, opening and closing the port is all fine. sigh Been banging my head on that one for a couple of weeks now, digging ever deeper into the APIs. (if you don’t count the WiFi AP)
