Hey Vadim. We are certainly open to new proposals since this is an ongoing design process and the existing draft is expected to be suboptimal.

The core requirements imposed on the serial encapsulation format are transparency and implementation simplicity. The simplicity requirement is probably self-evident and requires no further explanation: the simplicity of the whole protocol is one of its core design goals.

The communication channel transparency here is to be understood as the lack of coupling between the probability of a failure occurring in the communication channel and the data exchanged over the channel. We define “failure” as a temporary disruption of communication, undetected data corruption, or misinterpretation of data.

The designer of a high-integrity system has to perform a comprehensive analysis of the failure modes to prove the behavioral properties of the system. It is convenient to approach the task by assuming that there is a malicious agent attempting to subvert the system, even though the problem is not related to security in general.

If you adopt that mindset, you can quickly observe that any type of communication channel that does not offer robust separation between data and control symbols is non-transparent, because it is always possible to construct a sequence of data symbols that can be interpreted as a control sequence. A simple test: embed a valid frame into another frame and check whether the inner frame still appears as a valid frame on the communication channel; if it does, the protocol can be subverted by invalidating the control symbols of the outer frame, causing the parser state machine to interpret (part of) the data as a control sequence.

For example, suppose a frame is defined as (this is a very common pattern): (control-symbol, length, payload). Then, define payload as (control-symbol, length2, payload2). If the first control-symbol is missed, can the protocol state machine determine that the following symbols are to be ignored?
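To make the question concrete, here is a minimal Python sketch of a naive parser for such a format. The `SOF` value, the one-byte length field, and the `encode`/`parse` helpers are all made up for illustration and do not correspond to any real protocol.

```python
from typing import List

SOF = 0x55  # hypothetical start-of-frame control symbol


def encode(payload: bytes) -> bytes:
    """Build (control-symbol, length, payload) with a one-byte length."""
    assert len(payload) < 256
    return bytes([SOF, len(payload)]) + payload


def parse(stream: bytes) -> List[bytes]:
    """Naive parser: hunt for SOF, read the length, take that many bytes."""
    frames = []
    i = 0
    while i < len(stream):
        if stream[i] != SOF:
            i += 1  # not a control symbol, keep hunting
            continue
        if i + 1 >= len(stream):
            break  # length byte not received yet
        n = stream[i + 1]
        if i + 2 + n > len(stream):
            break  # payload not fully received yet
        frames.append(stream[i + 2:i + 2 + n])
        i += 2 + n
    return frames


inner = encode(b"spoofed")   # a valid frame used as payload
outer = encode(inner)        # (control-symbol, length, inner frame)

intact = parse(outer)        # the outer frame is delivered as intended
damaged = parse(outer[1:])   # the first control symbol is lost in transit
```

With the first control symbol missing, the parser skips the orphaned length byte, locks onto the control symbol inside the payload, and delivers `b"spoofed"` as if it had been sent as a frame of its own.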

Another issue pertaining to transparency is fault recovery. An encapsulation that does not offer robust segregation of control symbols may exhibit poor worst-case failure recovery time if the parser state machine mislabels incoming control symbols as data or vice versa due to data corruption (or malicious activity of the sender).

For example, suppose that in the frame format above the length prefix is damaged so that it indicates a very large value. The parser state machine then incapacitates the communication channel until either the expected length is traversed or a failure recovery heuristic is triggered. Such heuristics tend to impose undue constraints upon the protocol and complicate the analysis of the system, so they are to be avoided.
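A rough illustration of the cost, using the same hypothetical (control-symbol, length, payload) layout with a one-byte length field; the `SOF` value and the helper names are assumptions for the sketch only. A single corrupted length byte makes the parser swallow a long run of perfectly valid frames.

```python
from typing import List

SOF = 0x55  # hypothetical start-of-frame control symbol


def encode(payload: bytes) -> bytes:
    """Build (control-symbol, length, payload) with a one-byte length."""
    assert len(payload) < 256
    return bytes([SOF, len(payload)]) + payload


def parse(stream: bytes) -> List[bytes]:
    """Naive parser: hunt for SOF, read the length, take that many bytes."""
    frames = []
    i = 0
    while i < len(stream):
        if stream[i] != SOF:
            i += 1
            continue
        if i + 1 >= len(stream):
            break
        n = stream[i + 1]
        if i + 2 + n > len(stream):
            break  # channel is stalled until n bytes have arrived
        frames.append(stream[i + 2:i + 2 + n])
        i += 2 + n
    return frames


# One hundred valid frames, three bytes each on the wire.
clean = b"".join(encode(b"x") for _ in range(100))

# Corrupt exactly one byte: the length of the first frame becomes 255.
damaged = bytearray(clean)
damaged[1] = 0xFF

received = parse(bytes(damaged))
good = [f for f in received if f == b"x"]
lost = 100 - len(good)
```

In this sketch the one damaged byte costs 86 of the 100 frames, and the parser additionally emits a 255-byte garbage frame that only a payload checksum could reject. With a wider length field the blackout grows accordingly.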

One might object that the last failure case is addressable with recursive parsers, and that is true, but it does not help against the first issue, and recursive parsers are hard to implement in a system whose resource consumption has to be tightly bounded.

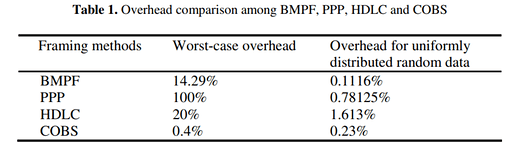

As mentioned in the PyUAVCAN documentation, consistent overhead byte stuffing (COBS) may be leveraged as a means of implementing transparent channels while keeping the overhead low, but this approach suffers from poor error recovery characteristics: what happens if a large-valued data symbol is misinterpreted as a COBS control symbol, or if a correctly placed COBS symbol is corrupted such that it indicates a large skip distance?

You might be tempted to think that these considerations have an academic feel and are hardly relevant in practical applications. This is not true. First, as I already mentioned, if you are dealing with a high-integrity system, then fringe cases have to be analyzed. Second, a sufficiently noisy channel may occasionally trigger failure cases in very real applications, leading to potentially dangerous circumstances; for example, you may have already seen the discussion related to the VESC motor control protocol, whose poor design may cause safety issues.

Non-transparent encapsulation formats are still used successfully in many applications where a high degree of functional safety is not required or the communication medium is assumed to be robust, such as GNSS hardware (e.g., RTCM, u-blox), communication modems (e.g., XBee), etc. Assumptions that are valid in such applications do not hold for UAVCAN.

If you look at protocols designed for high-reliability applications, you will see that they implement transparent channels virtually universally. The commonly-known MODBUS RS-485 comes to mind, where the control-data segregation is implemented with the help of strict timing requirements (impractical for UAVCAN). DDS/XRCE offers a serial transport design recommendation which is based on HDLC as well, for the same reasons.
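For comparison, the nesting test described earlier can be applied to an HDLC-like byte-stuffing scheme. This is only a sketch: it assumes the delimiter `0x7E`, escape `0x7D`, and XOR mask `0x20` used by HDLC-like asynchronous framing (the actual draft may choose different values), it omits the CRC, and `encode`/`parse` are illustrative names.

```python
from typing import List

FLAG = 0x7E  # frame delimiter (assumed, as in HDLC-like async framing)
ESC = 0x7D   # escape symbol (assumed)
XOR = 0x20   # mask applied to an escaped byte (assumed)


def encode(payload: bytes) -> bytes:
    """Delimit the payload with FLAG and escape FLAG/ESC inside it."""
    out = bytearray([FLAG])
    for b in payload:
        if b in (FLAG, ESC):
            out += bytes([ESC, b ^ XOR])
        else:
            out.append(b)
    out.append(FLAG)
    return bytes(out)


def parse(stream: bytes) -> List[bytes]:
    """Deliver the unescaped bytes found between two delimiters."""
    frames = []
    buf = None  # None until the first delimiter has been seen
    escaped = False
    for b in stream:
        if b == FLAG:
            if buf:
                frames.append(bytes(buf))
            buf = bytearray()
            escaped = False
        elif buf is None:
            continue  # not synchronized yet, discard
        elif b == ESC:
            escaped = True
        else:
            buf.append(b ^ XOR if escaped else b)
            escaped = False
    return frames


inner = encode(b"spoofed")   # a valid frame used as payload
outer = encode(inner)        # delimiters of the inner frame get escaped

intact = parse(outer)        # the outer frame is delivered as intended
damaged = parse(outer[1:])   # the opening delimiter is lost in transit
```

The delimiter never appears between the two outer delimiters on the wire, so the embedded frame cannot be mistaken for a real one, and the parser resynchronizes at the next delimiter regardless of what the corrupted data contains.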

The statement “byte stuffing approach is not DMA friendly” is true but the whole truth is “stream-oriented serial interfaces like UART are not DMA friendly”. Either we have to live with that or I am missing something, in which case please correct me.