Differential pressure sensor demo

@tridge It is great that you have moved on to analyze other parts of DS-015, but I would like to reach some sort of conclusion regarding the airspeed sensor node (mind the difference: not an air data computer, so not an idiomatic DS-015) before switching the topic. I proposed that we construct a very simple demo based on your inputs. I did that yesterday; please, do have a look (@scottdixon also suggested that we make the repository public, so it is now public):

I hope this demo will be a sufficient illustration of my proposition that DS-015 can be stretched to accommodate your requirements (at least in this case for now). In fact, as it stands, the demo does not actually leverage any data types from the reg.drone namespace, not that it was an objective to avoid it.

May I suggest that you run it locally using Yakut and poke around in it a little bit? I have to note, though, that Yakut is a rather new tool; if you run into any issues during its installation, please open a ticket, and sorry for any inconvenience. You may notice that the register read/write command is terribly verbose. That’s true, and I should improve this experience soon (this is, by the way, the part that can be automated if the aforementioned plug-and-play auto-configuration proposal is implemented).

We can easily construct additional demos as visual aids for this discussion (it only takes an hour or two).

Goals and motivation

I risk repeating myself again here since this topic was covered in the Guide, but please bear with me — I don’t want any accidental misunderstanding to poison this conversation further.

My reference to Torvalds vs. Tanenbaum was to illustrate the general scope of the debate, not to recycle the arguments from a different domain. We both know that distributed systems are commonly leveraged in state-of-the-art robotics and ICT. I am not sure if one can confidently claim that “distributed systems won” (I’m not even sure what that would mean exactly), but I think we can easily agree that there exists a fair share of applications where they are superior. Avionics is one of them. Robotic systems are another decent example: look at the tremendous ecosystem built around ROS!

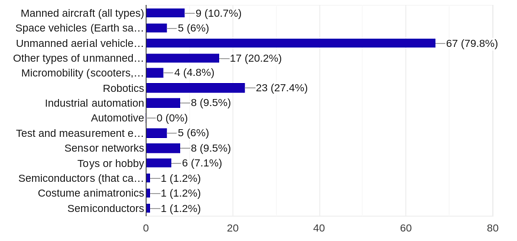

It is my aspiration (maybe an overly ambitious one? I guess we’ll see) to enable a similar ecosystem, spanning a large set of closely related domains from avionics to robotics and more, with the help of UAVCAN. It is already being leveraged in multiple domains, although light unmanned aviation remains, by far, the largest adopter (this survey was also heavily affected by a selection bias, so the numbers are only crude approximations):

The requirements for LRUs and software components overlap to a significant extent across many of these domains. It is, therefore, possible to let engineers and researchers working in any of these fields rely on the advances made in the adjacent areas. I am certain that many business-minded people who are following this conversation will recognize the benefits of this.

UAVCAN v1 is perfectly able to serve as the foundation for such an inter-domain ecosystem, but it will only succeed if we make the first steps right and position it properly from the start. One of the preconditions is that we align its design philosophy with the expectations of modern-day experts, many of whom are well-versed in software engineering. This is not surprising, considering that just about every sufficiently complex automation system being developed today — whether vehicular, robotic, industrial — is software-defined.

The idea of UAVCAN becoming a technology that endorses and propagates flawed design practices like the specimen below keeps me up at night. I take full responsibility for it because an engineer working with UAVCAN v0 simply does not have access to adequate tools to produce good designs.

You might say that a man is always free to shoot himself in the foot, no matter how great the tool is. But as a provider of said tool, I am responsible for doing my part in raising the sanity waterline, if only by a notch. Hence the extensive design guide, philosophy, tropes, ideologies, and opinionated best practices. Being a mere human, I might occasionally get carried away and produce overly idealistic proposals, which is why I depend on you and other adopters to keep the hard business objectives in sight. My experience with the PX4 leadership indicates that it is always possible to find a compromise between immediate interests and the long-term benefits of a cleaner architecture by way of sensible and respectful dialogue.

Your last few posts look a bit troubling, as they appear to contain critique directed at your original interpretation of the standard while not taking into account the corrections that I introduced over the course of this conversation. Perhaps the clarity of expression is not my strong suit. The one thing that troubles me most is that you appear to be evaluating DS-015 as a sensor network rather than what it really is. I hope the demos will make things clearer.

On GNSS service

I think I understand where you are coming from. We had a lengthy conversation a year and a half ago about the deficiencies of the v0 application layer, where we agreed it could be improved; you went on to list the specifics. I suppose I spent enough time tinkering with v0 and its many applications to produce a naïve design that would exactly match your (and many other adopters’) expectations. Instead, I made DS-015. Why?

When embarking on any non-trivial project, one starts by asking “what are the business requirements” and “what are the constraints”, then applies constrained optimization to approximate the former. With DS-015, the requirements (the publicly visible subset thereof) can be seen here: DS-015 MVP progress tracking. One specific constraint was that it must be possible to deploy a basic DS-015 configuration on a small UAV equipped with a 1 Mbps Classic CAN bus. If the constraint is satisfied and the business requirements are met, the design is acceptable. I suppose it makes sense.

One might instead maximize an arbitrary parameter without regard for other aspects of the design. For example, it could be the bus utilization, data transfer latency, flash space, how many lines of code one needs to write to bring up a working system, et cetera. Such blind optimization is mostly reminiscent of games or hobby projects, where the process of optimization is the goal in itself. This is not how engineering works.

At 10 Hz, the example 57-byte message you’ve shown requires 57 bytes * 10 Hz = 570 bytes per second of bandwidth, or 90 Classic CAN frames per second. At 1 Mbps, the resulting worst-case bus load would be about 1%.

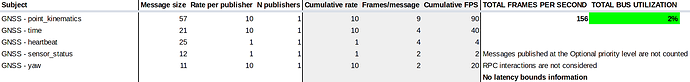

At the same 10 Hz, the DS-015 GNSS service along with a separate yaw message requires 156 frames per second, thereby loading the bus by 2%:

The yaw is to be represented using uavcan.si.sample.angle.Scalar. The kinematic state message is intended only for publishers that are able to estimate the full kinematic state, which is not the case in this example.

Is DS-015 less efficient? Yes! It is about half as efficient as your optimized message, or one percentage point of bus load worse, depending on how you squint. Should you care? I don’t think so. At roughly 2% per receiver, you would need to run about 50 GNSS receivers on the bus to exhaust its throughput. Even then, you would be able to selectively disable subjects that your application doesn’t require to conserve more bandwidth (this is built into UAVCAN v1).
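For anyone who wants to check the arithmetic, here is a rough sketch in Python. It rests on assumptions not spelled out above: UAVCAN/CAN v1 framing on Classic CAN (7 payload bytes plus a tail byte per frame, and a 2-byte transfer CRC on multi-frame transfers), every frame counted as a full-length 29-bit-ID frame, and a nominal frame length that ignores bit stuffing. The function and constant names are made up for this illustration.

```python
import math

# Assumed UAVCAN/CAN v1 framing on Classic CAN: 8 data bytes per frame,
# one of which is the tail byte, leaving 7 bytes for the payload.
PAYLOAD_BYTES_PER_FRAME = 7
# Multi-frame transfers carry an extra 2-byte transfer CRC.
TRANSFER_CRC_BYTES = 2
# Extended-ID Classic CAN frame with 8 data bytes, including the 3-bit
# interframe space, excluding stuff bits (stuffing can add up to 29 bits).
NOMINAL_FRAME_BITS = 131


def frames_per_transfer(payload_bytes: int) -> int:
    """Number of Classic CAN frames needed to carry one transfer."""
    if payload_bytes <= PAYLOAD_BYTES_PER_FRAME:
        return 1  # single-frame transfer, no transfer CRC
    total = payload_bytes + TRANSFER_CRC_BYTES
    return math.ceil(total / PAYLOAD_BYTES_PER_FRAME)


def bus_load(frames_per_second: float,
             bit_rate: float = 1_000_000,
             frame_bits: int = NOMINAL_FRAME_BITS) -> float:
    """Fraction of the bus capacity used, treating every frame as full-length."""
    return frames_per_second * frame_bits / bit_rate


optimized_fps = frames_per_transfer(57) * 10  # the 57-byte message at 10 Hz
ds015_fps = 156                               # figure quoted in this post

for name, fps in (("optimized", optimized_fps), ("DS-015", ds015_fps)):
    print(f"{name}: {fps} frames/s, load {bus_load(fps):.1%}")

# How many DS-015 GNSS receivers it would take to saturate the bus.
print(f"receivers to saturate: {1 / bus_load(ds015_fps):.0f}")
```

With worst-case bit stuffing the per-frame length grows, so the loads come out somewhat higher than with the nominal figure; pass a different `frame_bits` to see the effect.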

If you understand the benefits of service-oriented design in general (assuming idealized settings detached from our constraints), you might see then how this service definition is superior compared to your alternative, while having negligible cost in terms of bandwidth. I, however, should stop making references to the Guide, where this point is explained sufficiently well.

I should also address your note about double timestamping in reg.drone.physics.time.TAI64VarTs. In robotics, it is commonly required to map various sensor feeds, data transfers, and events on a shared time system — this enables complex distributed activities. In UAVCAN, we call it “synchronized time”. Said time may be arbitrarily chosen as long as all network participants agree about it. In PX4-based systems (maybe this is also true for ArduPilot?), this time is usually the autopilot’s own monotonic clock. In more complex systems like ROS-based ones, this is usually the wall clock. Hence, this message represents the GNSS time in terms of the local distributed system’s time, which is actually a rather common design choice.
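To make the double timestamp less abstract, here is a minimal sketch of how a consumer might use it. The class and field names are hypothetical stand-ins, not the actual DS-015 field layout: the outer timestamp is in synchronized network time, and the value is the GNSS-derived TAI time at that same instant.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GnssTimeSample:
    """Hypothetical stand-in for a doubly-timestamped time message."""
    sync_timestamp_us: int  # sampling instant in synchronized network time
    tai_us: int             # GNSS-derived TAI time at that same instant


def tai_offset_us(sample: GnssTimeSample) -> int:
    """Offset that maps synchronized network time onto TAI."""
    return sample.tai_us - sample.sync_timestamp_us


def sync_to_tai_us(sync_time_us: int, sample: GnssTimeSample) -> int:
    """Convert any synchronized-time timestamp to TAI using one sample."""
    return sync_time_us + tai_offset_us(sample)


# Example with made-up numbers: the network clock read 5 s when TAI was sampled.
sample = GnssTimeSample(sync_timestamp_us=5_000_000,
                        tai_us=1_600_000_000_000_000)
# An event stamped 250 ms later in network time maps to TAI + 250 ms.
event_tai_us = sync_to_tai_us(5_250_000, sample)
print(event_tai_us)
```

Because both values refer to the same instant, the offset does not depend on when the message happens to arrive.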

Timestamping of all sensor feeds also allows you to address the transport latency variations since each sample from time-sensitive sensor feeds comes with an explicit timestamp that is invariant to the transmission latency.
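A small illustration of that invariance, with hypothetical names and made-up numbers: a rate estimated from the source timestamps is unaffected by delivery jitter, whereas one estimated from arrival times is not.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TimestampedSample:
    """Hypothetical sensor sample stamped at the source in synchronized time."""
    timestamp_us: int  # sampling instant, synchronized network time
    value: float       # e.g. altitude in metres


def rate_of_change(prev: TimestampedSample, curr: TimestampedSample) -> float:
    """Rate per second computed from the source timestamps."""
    dt_s = (curr.timestamp_us - prev.timestamp_us) / 1e6
    if dt_s <= 0:
        raise ValueError("samples must be in increasing time order")
    return (curr.value - prev.value) / dt_s


# Two samples taken exactly 100 ms apart at the sensor.
a = TimestampedSample(timestamp_us=1_000_000, value=10.0)
b = TimestampedSample(timestamp_us=1_100_000, value=11.0)

# Made-up arrival times: the second sample was delayed by an extra 30 ms.
arrival_a_us, arrival_b_us = 1_002_000, 1_132_000

rate_from_timestamps = rate_of_change(a, b)
rate_from_arrivals = (b.value - a.value) / ((arrival_b_us - arrival_a_us) / 1e6)

print(rate_from_timestamps)  # uses the 100 ms sampling interval
print(rate_from_arrivals)    # skewed by the 130 ms arrival interval
```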

Regarding the extra timestamp in reg.drone.physics.kinematics.translation.Velocity3Var: this is a defect. The nested type should have been uavcan.si.unit.velocity.Vector3 rather than uavcan.si.sample.velocity.Vector3. @coder_kalyan has already volunteered to fix this, thanks Kalyan.

As for the excessive variance states, you simply counted them incorrectly. This is understandable because crawling through the many files in the DS-015 namespace is unergonomic at best. The good news is that @bbworld1 is working to improve this experience (at the time of writing this, data type sizes reported by this tool may be a bit nonsensical, so beware):

https://bbworld1.gitlab.io/uavcan-documentation-example/reg/Namespace.html

It is hard not to notice that your posts are getting a bit agitated. I can relate. But do you not see how a hasty dismissal may have long-lasting negative consequences on the entire ecosystem? People like @proficnc, @joshwelsh, and other prominent members of the community look up to you to choose the correct direction for ArduPilot, and, by extension, for the entire world of light unmanned systems for a decade to come. We don’t want this conversation to end up in any irresponsible decisions being made, so let us please find a way to communicate more sensibly.

I don’t want to imply that the definitions we have are perfect and you are just reading them wrong. Sorry if it came out this way. I think they are, in fact, far from perfect (which is why the version numbers are v0.1, not v1.0), but the underlying principles are worth building upon.

Should we craft up and explore a GNSS demo along with the airspeed one?

Questions

Lastly, I should run through the following questions that appear to warrant special attention.

I implied no such thing. Sorry if I was unclear.

I agree this is useful in many scenarios, but the degree to which you can make the system self-configurable is obviously limited. By way of example, your existing v0 implementation is not fully auto-configurable either, otherwise, there would be no bits like this:

What I am actually suggesting is that we build the implementation gradually. We start with an MVP that takes a bit of dedication to set up correctly. Then we can apply autoconfiguration where necessary to improve the user experience. Said autoconfiguration need not require active collaboration from simple nodes, as discussed in the thread I linked.

Observe that the main product of the GNSS service is the set of kinematic states published over separate subjects. These subjects are completely abstracted from the means of estimating the vehicle’s pose/position. Whether it is UWB, VO, or any related technology, the output is representable using the same basic model.

I approve of your intent to move the conversation onto a more constructive plane. Before we propose any changes, though, we should first identify how exactly @tridge’s business requirements differ, and why. For example, it is not clear why the iTOW is actually necessary and how it is compatible with GNSS other than GPS; I suspect another case of an XY problem, but maybe we don’t have the full information yet (in which case I invite Andrew to share it).