Per the dev call, this is what I think we all agreed to

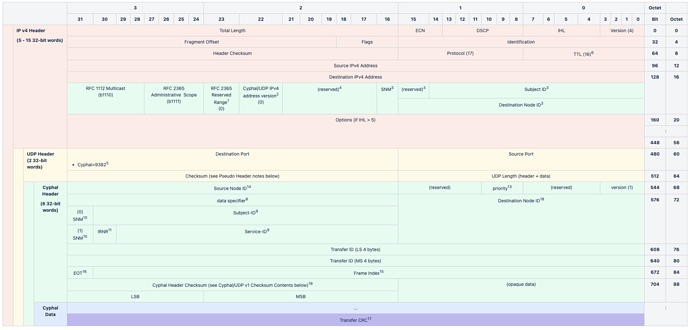

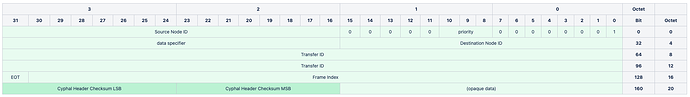

uint4 version # <- 1

void4

@assert _offset_ == {8}

uint3 priority # Duplicates QoS for ease of access; 0 -- highest, 7 -- lowest.

void5

@assert _offset_ == {16}

uint16 source_node_id

uint16 destination_node_id

uint16 data_specifier # Like in Cyphal/serial: subject-ID | (service-ID + request/response discriminator).

@assert _offset_ == {64}

uint64 transfer_id

@assert _offset_ == {128}

uint31 frame_index

bool end_of_transfer

uint16 user_data

# Opaque application-specific data with user-defined semantics. Generic implementations should ignore

@assert _offset_ % 16 == {0}

uint8[2] header_crc16_big_endian

@assert _offset_ / 8 == {24} # Fixed-size 24-byte header with natural alignment for each field ensured.

@sealed

- This is an optimization for UDP/IP on Ethernet. By limiting the multicast group ID to the least significant 23 bits, Ethernet hosts can avoid additional filtering responsibilities above layer 2.

- RFC. 2365, Section 6.2.1 reserves 239.0.0.0/10 and 239.64.0.0/10 for future use (because of footnote 1, Cyphal/UDP does not have access to the 239.128.0.0/10 scope). Cyphal/UDP uses this bit to isolate IP header version 0 traffic (note that the IP header version is not, necessarily, the same as the Cyphal Header version) to the 239.0.0.0/10 scope but we can enable the 239.64.0.0/10 scope in the future.

- SNM (Service, Not Message): If set then this is an RPC request or response and the 16 LSbs of the destination IP address is the full-range destination node identifier. If not set then the 15 LSbs of the destination IP address are a subject identifier for a pub/sub message and the 16th LSb is 0.

- Zero on transmit, discard on receipt unless zero.

- This is a temporary UDP port. We’ll register an official one later.

- Per RFC 1112, the default TTL is 1, which is unacceptable. Therefore, publishers should use the TTL value of 16 by default, which is chosen as a sensible default suitable for any intravehicular network.

- (comment removed)

- The data specifier is taken directly from Cyphal/Serial (pycyphal.transport.serial package — PyCyphal 1.15.0 documentation)

- If the SNM10 bit is set then this is a 10-bit service identifier with a 1-bit IRNR11 flag, otherwise it is a 13-bit subject identifier.

- SNM (Service, Not Message). Same value as found in the destination IP header (SNM3).

- IRNR (Is Request Not Response) if SNM10 is set.

- (comment removed)

- Like in CAN: 0 – highest priority, 7 – lowest priority. This data is duplicated from lower-layer QoS fields but provided in the Cyphal header to simplify transfer forwarding where the QoS data is not readily available above the transport layer.

- 0xFFFF == anonymous transfer

- The 31 bit frame index within the current transfer.

- EOT (End Of Transfer): if the most significant bit (31st) bit of the 32-bit frame index is set if the current frame is the last frame of the transfer.

- If EOT16 is set then this is the CRC of the reassembled transfer (header data excluded). This also applies to single frame transfers where EOT will always be set. In this case the CRC applies to just the single frame which is different than CAN where single transfers do not use a Cyphal CRC as they rely on the CAN CRC exclusively. Because the UDP checksum is weak the UDP version of Cyphal relies on the UDP checksum only as an optimization for multi-part transfers (where the CRC failure can catch an error before the transfer is reassembled and the strong Cyphal CRC is applied).

- 0xFFFF == broadcast

- Header CRC is CRC-16/CCITT-FALSE (aka CRC-16/AUTOSAR) and is encoded as a two-byte, big-endian integer.