The idea of profiles was floated by Scott a few months ago: Future Proposal: Featherweight Profile. Overall, I think it’s probably a sensible direction to move in, but we have not yet accumulated the critical mass of alterations needed to justify breaking off a profile. Scott’s proposal actually sits at the opposite end of the determinism/flexibility spectrum, but the idea is the same.

That’s not quite true. The way you described it, it sounds like the resource utilization of the protocol stack is a function of the network configuration, which is not something that can be robustly controlled or predicted by a given node. The protocol is intentionally designed to ensure that a properly constructed implementation (stack) can demonstrate predictable behaviors regardless of the network configuration. This is also a key property of Libcanard (which is optimized for real-time systems).

The bootloader allocates a well-known set of transfer-ID counters statically and its memory footprint is not dependent on which interfaces are active or which of the tasks are being performed. Now, your case is different because certain base assumptions that the Specification makes about the underlying communication system are not met in your design (it’s too dynamic).

But setting the transfer-ID timeout to zero (which relates to transfer reception) does not automatically relieve you of the requirement to keep transfer-ID states for outgoing transfers. If you just used a zero TID for all outgoing transfers, you would run into compatibility issues with third-party software and hardware. The following approach, however, is viable from the protocol design standpoint (the RAM issue notwithstanding):

I think you might consider removing the least-recently-used TID counters automatically when the RAM resources are exhausted. It’s probably the solution that departs the least from the Specification.
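To make the suggestion concrete, here is a minimal sketch of a bounded table of outgoing transfer-ID counters with least-recently-used eviction. The class name `LruTransferIdMap`, the `key` argument (standing in for whatever identifies an outgoing session in your design), and the default modulo of 32 are all assumptions made for illustration; this is not code from Libcanard or PyUAVCAN.

```python
from collections import OrderedDict
from typing import Hashable


class LruTransferIdMap:
    """Hypothetical bounded table of outgoing transfer-ID counters."""

    def __init__(self, capacity: int, modulo: int = 32) -> None:
        if capacity < 1:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._modulo = modulo  # Assumption: cyclic transfer-ID with this modulo.
        self._counters: "OrderedDict[Hashable, int]" = OrderedDict()

    def next_transfer_id(self, key: Hashable) -> int:
        """Return the transfer-ID to use for the next transfer of this session."""
        # Remove the entry (if any) so that re-inserting marks it as most recent.
        value = self._counters.pop(key, 0)
        if len(self._counters) >= self._capacity:
            # Table is full: drop the least-recently-used counter.
            self._counters.popitem(last=False)
        self._counters[key] = (value + 1) % self._modulo
        return value
```

An evicted session restarts from zero when it is used again, which to the receiver looks the same as a node that lost its state, so eviction should ideally be rare compared to the receivers’ transfer-ID timeout.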

In the longer term, we should consider extending the Specification or introducing a profile (the latter is much more convoluted) based on your experiments here. We are watching you, Jeremy.

Out-of-order transfers shall be dropped. This is explicitly required by “Reassembled transfers shall form an ordered transfer sequence.” If you accept a transfer with an out-of-order TID, the set of transfers will not form an ordered sequence, hence a violation. Would you like to volunteer to add a footnote to clarify this, perhaps?

You don’t have to. Normally, in our applications, a node works non-stop until the system is shut down (if ever). See, the transfer-ID timeout has to be sized properly to suit the trade-off (section 4.1.2.4 Behaviors, non-normative blue box):

Low transfer-ID timeout values increase the risk of undetected transfer duplication when such transfers are significantly delayed due to network congestion, which is possible with very low-priority transfers when the network load is high.

High transfer-ID timeout values increase the risk of an undetected transfer loss when a remote node suffers a loss of state (e.g., due to a software reset).
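The ordering rule and the timeout trade-off can be sketched together as a receiver-side acceptance check. This is a simplified illustration that assumes a monotonically increasing, non-wrapping transfer-ID; a cyclic transfer-ID (as on CAN) would need a modular comparison instead. The names `OrderedReceiver` and `RxSessionState` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RxSessionState:
    last_transfer_id: int
    last_timestamp: float  # Seconds; timestamp of the last accepted transfer.


class OrderedReceiver:
    """Hypothetical per-session filter that keeps accepted transfers ordered."""

    def __init__(self, transfer_id_timeout: float) -> None:
        self._timeout = transfer_id_timeout
        self._state: Optional[RxSessionState] = None

    def accept(self, transfer_id: int, timestamp: float) -> bool:
        s = self._state
        timed_out = s is not None and (timestamp - s.last_timestamp) > self._timeout
        # Accept the first transfer, anything after the timeout has expired,
        # or a transfer whose ID is strictly greater than the last accepted one.
        if s is None or timed_out or transfer_id > s.last_transfer_id:
            self._state = RxSessionState(transfer_id, timestamp)
            return True
        return False  # Duplicate or out-of-order: drop.
```

With a 2-second timeout, a remote node that resets and restarts from transfer-ID 0 shortly after sending ID 6 has its transfers silently dropped until 2 seconds have passed since the last accepted transfer. That is the “undetected transfer loss” case, and it gets worse as the timeout grows.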

I expect this to be specified once UAVCAN/serial makes it into the spec doc. The existing experimental implementation in PyUAVCAN timestamps by the first delimiter of the frame (the ambiguity is resolved retroactively), and I think that’s probably optimal because the delimiter is the first element of the frame. Timestamping at the end is undesirable because the payload transmission time (and its escaping, if any) would skew the timestamp.
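A rough sketch of what timestamping by the first delimiter could look like in a byte-stream parser follows. It is not the PyUAVCAN implementation: the delimiter value, the class name `DelimitedStreamParser`, and the omission of escaping and CRC checks are all simplifying assumptions. Every delimiter is recorded as a potential frame start, and whether it really was one is only decided later, once payload bytes and a closing delimiter have arrived.

```python
from typing import Optional, Tuple

FRAME_DELIMITER = 0x00  # Assumption: illustrative value, not taken from any spec.


class DelimitedStreamParser:
    """Hypothetical parser that timestamps each frame by its opening delimiter."""

    def __init__(self) -> None:
        self._buffer = bytearray()
        self._start_timestamp: Optional[float] = None

    def feed(self, byte: int, timestamp: float) -> Optional[Tuple[float, bytes]]:
        """Process one byte; return (timestamp, payload) when a frame completes."""
        if byte == FRAME_DELIMITER:
            result = None
            if self._buffer and self._start_timestamp is not None:
                # This delimiter closes a frame; report the opening timestamp.
                result = (self._start_timestamp, bytes(self._buffer))
            self._buffer.clear()
            # The same delimiter may also open the next frame, so remember when
            # it arrived. Whether it really opens a frame is resolved later.
            self._start_timestamp = timestamp
            return result
        if self._start_timestamp is not None:
            self._buffer.append(byte)  # Bytes before any delimiter are ignored.
        return None
```

Because the timestamp is captured before any payload byte arrives, the length of the payload has no effect on it.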

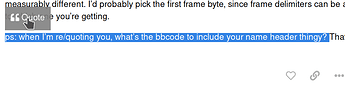

You can select the quoted text and then click “Quote”: